This patch refactors the generate_test_report script, namely turning it

into a proper library, and pulling the script/unittests out into

separate files, as is standard with most python scripts. The main

purpose of this is to enable reusing the library for the new GitHub

premerge.

Reviewers: tstellar, DavidSpickett, Keenuts, lnihlen

Reviewed By: DavidSpickett

Pull Request: https://github.com/llvm/llvm-project/pull/133196

The production buildbot master apparently has not yet been restarted

since https://github.com/llvm/llvm-zorg/pull/393 landed.

This reverts commit 96d1baedefc3581b53bc4389bb171760bec6f191.

Remove the FLANG_INCLUDE_RUNTIME option which was replaced by

LLVM_ENABLE_RUNTIMES=flang-rt.

The FLANG_INCLUDE_RUNTIME option was added in #122336 which disables the

non-runtimes build instructions for the Flang runtime so they do not

conflict with the LLVM_ENABLE_RUNTIMES=flang-rt option added in #110217.

In order to not maintain multiple build instructions for the same thing,

this PR completely removes the old build instructions (effectively

forcing FLANG_INCLUDE_RUNTIME=OFF).

As per discussion in

https://discourse.llvm.org/t/buildbot-changes-with-llvm-enable-runtimes-flang-rt/83571/2

we now implicitly add LLVM_ENABLE_RUNTIMES=flang-rt whenever Flang is

compiled in a bootstrapping (non-standalone) build. Because it is

possible to build Flang-RT separately, this behavior can be disabled

using `-DFLANG_ENABLE_FLANG_RT=OFF`. Also see the discussion on

implicitly adding runtimes/projects in #123964.
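As a minimal sketch of the two configurations described above, the following prints (rather than runs) the corresponding configure commands. The `SRC_DIR` and `BUILD_DIR` paths are placeholders assumed for illustration; only `LLVM_ENABLE_PROJECTS` and `FLANG_ENABLE_FLANG_RT` come from this commit message.

```shell
#!/bin/sh
# Sketch only: SRC_DIR and BUILD_DIR are placeholder paths, not taken
# from the commit. The commands are printed rather than executed.
SRC_DIR=${SRC_DIR:-llvm-project/llvm}
BUILD_DIR=${BUILD_DIR:-build}

# Bootstrapping build: flang-rt is implicitly added to LLVM_ENABLE_RUNTIMES.
implicit_cmd="cmake -S $SRC_DIR -B $BUILD_DIR -DLLVM_ENABLE_PROJECTS=flang"

# Same configuration, opting out because Flang-RT is built separately.
optout_cmd="$implicit_cmd -DFLANG_ENABLE_FLANG_RT=OFF"

printf '%s\n' "$implicit_cmd" "$optout_cmd"
```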

Clang is required to compile Flang. Instead of erroring out if Clang is

not enabled, for convenience implicitly add it to `LLVM_ENABLE_PROJECTS`,

consistent with how the MLIR dependency is handled.
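The implicit dependency handling can be modeled with a small shell sketch. It only mimics the manipulation of a semicolon-separated project list; the function name `add_flang_deps` is hypothetical, and the real logic lives in LLVM's CMake files.

```shell
#!/bin/sh
# Hypothetical model of the behavior described above: if "flang" is in a
# semicolon-separated project list and "clang" is not, append "clang".
add_flang_deps() {
  projects="$1"
  case ";$projects;" in
    *";flang;"*)
      case ";$projects;" in
        *";clang;"*) ;;                      # already enabled, nothing to do
        *) projects="$projects;clang" ;;     # implicitly add the dependency
      esac
      ;;
  esac
  printf '%s\n' "$projects"
}

add_flang_deps "flang;mlir"   # prints: flang;mlir;clang
add_flang_deps "clang;flang"  # prints: clang;flang
add_flang_deps "lld"          # prints: lld
```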

This is motivated by the discussion on whether flang-rt should be enabled implicitly

(https://discourse.llvm.org/t/buildbot-changes-with-llvm-enable-runtimes-flang-rt/83571/2).

Since the answer was yes, Clang would have been the only dependency not

enabled implicitly. Fixed with this commit.

Summary:

There were a few issues with the first one, leading to some errors and

warnings. Most importantly, this was building on MSVC which isn't

supported.

Summary:

These tools `amdhsa-loader` and `nvptx-loader` are used to execute unit

tests directly on the GPU. We use this for `libc` and `libcxx` unit

tests as well as general GPU experimentation. It looks like this.

```console

> clang++ main.cpp --target=amdgcn-amd-amdhsa -mcpu=native -flto -lc ./lib/amdgcn-amd-amdhsa/crt1.o

> llvm-gpu-loader a.out

Hello World!

```

Currently these are a part of the `libc` project, but this creates

issues as `libc` itself depends on them to run tests. Right now we get

around this by force-including the `libc` project prior to running the

runtimes build so that this dependency can be built first. We should

instead just make this a simple LLVM tool so it's always available.

This has the effect of installing these by default now instead of just

when `libc` was enabled, but they should be relatively small. Right now

this only supports a 'static' configuration. That is, we locate the CUDA

and HSA dependencies at LLVM compile time. In the future we should be

able to provide this by default using `dlopen` and some API.

I don't know if it's required to reformat all of these names since they

used the `libc` naming convention so I just left it for now.

Key Instructions will start development behind a compile time flag to avoid

passing on the increased memory usage to all debug builds. We're working on

improving DILocation memory characteristics simultaneously; once that work lands

we can remove `EXPERIMENTAL_KEY_INSTRUCTIONS`.

This patch doesn't add any code, it's just so we can get the SIE buildbot

building with the new option right away.

When building in tree clang without having `-pthread` we get a bunch of

`Assertion failed: FD != kInvalidFile && "Invalid or inactive file

descriptor"` when testing check-clang.

Details:

- Previously, we used the LLVM_BUILD_TELEMETRY flag to control whether

any Telemetry code will be built. This has proven to cause more nuisance

to both users of the Telemetry and any further extension of it. (E.g., we

needed to put #ifdef around caller/user code)

- So the new approach is to:

+ Remove this flag and introduce LLVM_ENABLE_TELEMETRY which would be

true by default.

+ If LLVM_ENABLE_TELEMETRY is set to FALSE (at buildtime), the library

would still be built BUT Telemetry cannot be enabled. And no data can be

collected.

The benefit of this is that it simplifies user (and extension) code

since we just need to put the check on Config::EnableTelemetry. Besides,

the Telemetry library itself is very small, hence the additional code to

be built would not cause any difference in build performance.

---------

Co-authored-by: Pavel Labath <pavel@labath.sk>

Extract Flang's runtime library to use the LLVM_ENABLE_RUNTIMES

mechanism. It will only become active when

`LLVM_ENABLE_RUNTIMES=flang-rt` is used, which also changes the

`FLANG_INCLUDE_RUNTIME` to `OFF` so the old runtime build rules do not

conflict. This also means that unless `LLVM_ENABLE_RUNTIMES=flang-rt` is

passed, nothing changes with the current build process.

Motivation:

* Consistency with LLVM's other runtime libraries (compiler-rt, libc,

libcxx, openmp offload, ...)

* Allows compiling the runtime for multiple targets at once using the

LLVM_RUNTIME_TARGETS configuration options

* Installs the runtime into the compiler's per-target resource directory

so it can be automatically found even when cross-compiling

Also see RFC discussion at

https://discourse.llvm.org/t/rfc-use-llvm-enable-runtimes-for-flangs-runtime/80826

Add a CMake flag (LLVM_BUILD_TELEMETRY) to disable building the

telemetry framework. The flag being enabled does *not* mean that

telemetry is being collected, it merely means we're building the generic

telemetry framework. Hence the flag is enabled by default.

Motivated by this Discourse thread:

https://discourse.llvm.org/t/how-to-disable-building-llvm-clang-telemetry/84305

This commit promotes the SPIR-V backend from experimental to official

status. As a result, SPIR-V will be built by default, simplifying

integration and increasing accessibility for downstream projects.

Discussion and RFC on Discourse:

https://discourse.llvm.org/t/rfc-promoting-spir-v-to-an-official-target/83614

Thanks to the effort of @RoseZhang03 and @aaryanshukla under the

guidance of @michaelrj-google and @amykhuang, we now have newhdrgen and

no longer have a dependency on TableGen and thus LLVM in order to start

bootstrapping a full build.

This PR removes:

- LIBC_HDRGEN_EXE; the in tree newhdrgen is the only hdrgen that can be

used.

- LIBC_USE_NEW_HEADER_GEN; newhdrgen is the default and only option.

- LIBC_HDRGEN_ONLY; there is no need to have a distinct build step for

old hdrgen.

- libc-api-test and libc-api-test-tidy build targets.

- All .td files.

It does not rename newhdrgen to just hdrgen. Will follow up with a

distinct PR for that.

Link: #117209

Link: #117254

Fixes: #117208

The situation that required symbol versions on the LLVM shared library

can also happen for clang-cpp, although it is less common: different

tools require different versions of the library, and through transitive

dependencies a process ends up with multiple copies of clang-cpp. This

causes havoc with ELF, because calls meant to go to one version of the

library end up with another.

I've also considered introducing a symbol version globally, but for

example the clang (C) library and other targets outside of LLVM/Clang,

e.g. libc++, would not want that. So it's probably best if we keep it to

those libraries.

This reverts commit 944478dd62a78f6bb43d4da210643affcc4584b6.

Reverted because of following error on greendragon

ld: unknown options: --version-script

clang: error: linker command failed with exit code 1 (use -v to see invocation)

The situation that required symbol versions on the LLVM shared library

can also happen for clang-cpp, although it is less common: different

tools require different versions of the library, and through transitive

dependencies a process ends up with multiple copies of clang-cpp. This

causes havoc with ELF, because calls meant to go to one version of the

library end up with another.

I've also considered introducing a symbol version globally, but for

example the clang (C) library and other targets outside of LLVM/Clang,

e.g. libc++, would not want that. So it's probably best if we keep it to

those libraries.

This is part of a series of patches that tries to improve DILocation bug

detection in Debugify. This first patch adds the necessary CMake flag to

LLVM and a variable defined by that flag to LLVM's config header, allowing

the next patch to track information without affecting normal builds.

This series of patches adds a "DebugLoc coverage tracking" feature, that

inserts conditionally-compiled tracking information into DebugLocs (and

by extension, to Instructions), which is used by Debugify to provide

more accurate and detailed coverage reports. When enabled, this feature

tracks whether and why we have intentionally dropped a DebugLoc,

allowing Debugify to ignore false positives. An optional additional

feature allows also storing a stack trace of the point where a DebugLoc

was unintentionally dropped/not generated, which is used to make fixing

detected errors significantly easier. The goal of these features is to

provide useful tools for developers to fix existing DebugLoc errors and

allow reliable detection of regressions by either manual inspection or

an automated script.

Summary:

The `libc` project automatically adds `libc` to the projects list if

it's in the runtimes list. This then causes it to enter the projects

directory to bootstrap a handful of utilities. This usage conflicts

with a new error message which effectively stopped us from doing this.

This patch weakens the error message to permit this single case.

The documentation tells you not to do this:

https://llvm.org/docs/CMake.html#llvm-related-variables

But until now we did not enforce it.

```

$ cmake ../llvm-project/llvm/ -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_PROJECTS="pstl" -DLLVM_ENABLE_RUNTIMES="libcxx;pstl"

```

```

CMake Error at CMakeLists.txt:166 (message):

Runtime project "pstl" found in LLVM_ENABLE_PROJECTS and

LLVM_ENABLE_RUNTIMES. It must only appear in one of them and that one

should almost always be LLVM_ENABLE_RUNTIMES.

```
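The check can be modeled with a short shell sketch. This is only an illustration of the rule on semicolon-separated lists, not the CMake implementation; the function name `check_no_overlap` is hypothetical.

```shell
#!/bin/sh
# Hypothetical model of the check: report every name that appears in both
# semicolon-separated lists and return non-zero if any is found.
check_no_overlap() {
  projects="$1"
  runtimes="$2"
  status=0
  old_ifs=$IFS
  IFS=';'
  for rt in $runtimes; do
    case ";$projects;" in
      *";$rt;"*)
        echo "Runtime project \"$rt\" found in LLVM_ENABLE_PROJECTS and LLVM_ENABLE_RUNTIMES." >&2
        status=1
        ;;
    esac
  done
  IFS=$old_ifs
  return $status
}

check_no_overlap "pstl" "libcxx;pstl" || echo "configuration rejected"
check_no_overlap "clang" "libcxx;pstl" && echo "configuration accepted"
```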

These are my initial build and code changes to support building LLVM

as a shared library/DLL on Windows (without force-exporting all symbols)

and making symbol visibility hidden by default on Linux, which requires adding

explicit symbol visibility macros to the whole LLVM codebase.

Updated cmake code to allow building llvm-shlib on windows by appending

/WHOLEARCHIVE:lib to the linker options.

Remove the hardcoded CMake error from using LLVM_BUILD_LLVM_DYLIB on

windows.

Updated CMake to define new macros to control conditional export macros

in llvm/Support/Compiler.h

Use /Zc:dllexportInlines- when compiling with clang-cl on Windows, with an

opt-out CMake option to disable it.

Replace some use of LLVM_EXTERNAL_VISIBILITY with new export macros.

Some of the cmake and code changes are based on @tstellar's earlier PR

https://github.com/llvm/llvm-project/pull/67502.

I have Windows building using clang-cl, while MSVC is at least able

to build libllvm, but some tests can't build because of LLVM iterator

template metaprogramming that doesn't work well with dllexport. Linux

should build without issue. My full branch, including all the

auto-generated export macros from the Clang-tooling-based system, is here:

https://github.com/fsfod/llvm-project/tree/llvm-export-api-20.0

ConstantFolding behaves differently depending on host's `HAS_IEE754_FLOAT128`.

LLVM should not change the behavior depending on host configurations.

This reverts commit 14c7e4a1844904f3db9b2dc93b722925a8c66b27.

(llvmorg-20-init-3262-g14c7e4a18449 and llvmorg-20-init-3498-g001e423ac626)

This is a reland of (#96287). This patch attempts to reduce the reverted

patch's clang compile time by removing #includes of float128.h and

inlining convertToQuad functions instead.

This is a reland of #96287. This change makes tests in logf128.ll ignore

the sign of NaNs for negative value tests and moves an #include <cmath>

to be blocked behind #ifndef _GLIBCXX_MATH_H.

Fix the builds with LLVM_TOOL_LLVM_DRIVER_BUILD enabled.

LLVM_ENABLE_EXPORTED_SYMBOLS_IN_EXECUTABLES is not completely

compatible with export_executable_symbols as the latter will be ignored

if the former is set to NO.

Fix the issue by passing if symbols need to be exported to

llvm_add_exectuable so the link flag can be determined directly

without calling export_executable_symbols_* later.

`LLVM_ENABLE_EXPORTED_SYMBOLS_IN_EXECUTABLES` is not completely

compatible with `export_executable_symbols` as the latter will be ignored

if the former is set to NO.

Fix the issue by passing if symbols need to be exported to

`llvm_add_exectuable` so the link flag can be determined directly

without calling `export_executable_symbols_*` later.

CMake -GXcode would otherwise offer to create one scheme for each

target, which ends up being a lot. For now, limit the default to the

`check-*` LIT targets, plus `ALL_BUILD` and `install`.

For targets that aren't in the default list, we now have a configuration

variable to promote an extra list of targets into schemes, for example

`-DLLVM_XCODE_EXTRA_TARGET_SCHEMES="TargetParserTests;SupportTests"` to

add schemes for `TargetParserTests` and `SupportTests` respectively.

The `llvm.spec.in` is turned into `llvm.spec` through cmake. The spec

file's `%build` section runs `./configure` which has been deprecated

since 2016 (See e49730d4baa8443ad56f59bd8066bf4c1e56ea72).

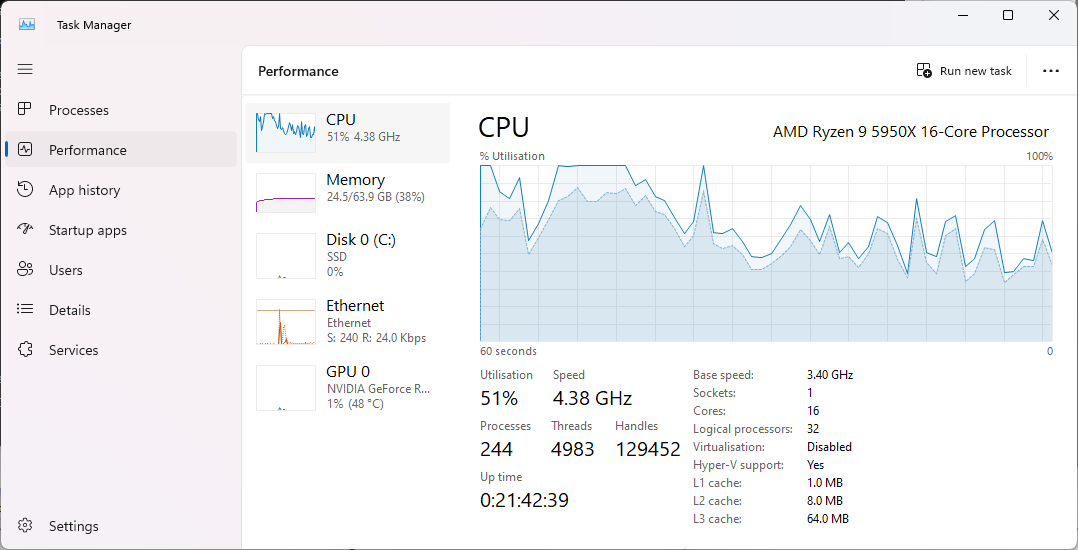

### Context

We have a longstanding performance issue on Windows, where to this day,

the default heap allocator is still lockfull. With the number of cores

increasing, building and using LLVM with the default Windows heap

allocator is sub-optimal. Notably, the ThinLTO link times with LLD are

extremely long, and increase proportionally with the number of cores in

the machine.

In a6a37a2fcd, I introduced the ability to build LLVM with several popular lock-free

allocators. Downstream users however have to build their own toolchain

with this option, and building an optimal toolchain is a bit tedious and

long. Additionally, LLVM is now integrated into Visual Studio, which

AFAIK re-distributes the vanilla LLVM binaries/installer. The point

being that many users are impacted and might not be aware of this

problem, or are unable to build a more optimal version of the toolchain.

The symptom before this PR is that most of the CPU time goes to the

kernel (darker blue) when linking with ThinLTO:

With this PR, most time is spent in user space (light blue):

On higher core count machines, before this PR, the CPU usage becomes

pretty much flat because of contention:

<img width="549" alt="VM_176_windows_heap"

src="https://github.com/llvm/llvm-project/assets/37383324/f27d5800-ee02-496d-a4e7-88177e0727f0">

With this PR, similarly most CPU time is now used:

<img width="549" alt="VM_176_with_rpmalloc"

src="https://github.com/llvm/llvm-project/assets/37383324/7d4785dd-94a7-4f06-9b16-aaa4e2e505c8">

### Changes in this PR

The avenue I've taken here is to vendor/re-licence rpmalloc in-tree, and

use it when building the Windows 64-bit release. Given the permissive

rpmalloc licence, prior discussions with the LLVM foundation and

@lattner suggested this vendoring. Rpmalloc's author (@mjansson) kindly

agreed to ~~donate~~ re-licence the rpmalloc code in LLVM (please do

correct me if I misinterpreted our past communications).

I've chosen rpmalloc because it's small and gives the best value

overall. The source code is only 4 .c files. Rpmalloc is statically

replacing the weak CRT alloc symbols at link time, and has no dynamic

patching like mimalloc. As an alternative, there were several

unsuccessful attempts made by Russell Gallop to use SCUDO in the past,

please see thread in https://reviews.llvm.org/D86694. If later someone

comes up with a PR of similar performance that uses SCUDO, we could then

delete this vendored rpmalloc folder.

I've added a new cmake flag `LLVM_ENABLE_RPMALLOC` which essentially sets

`LLVM_INTEGRATED_CRT_ALLOC` to the in-tree rpmalloc source.

### Performance

The most obvious test is profiling a ThinLTO linking step with LLD. I've

used a Clang compilation as a testbed, i.e.

```

set OPTS=/GS- /D_ITERATOR_DEBUG_LEVEL=0 -Xclang -O3 -fstrict-aliasing -march=native -flto=thin -fwhole-program-vtables -fuse-ld=lld

cmake -G Ninja %ROOT%/llvm -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_ASSERTIONS=TRUE -DLLVM_ENABLE_PROJECTS="clang" -DLLVM_ENABLE_PDB=ON -DLLVM_OPTIMIZED_TABLEGEN=ON -DCMAKE_C_COMPILER=clang-cl.exe -DCMAKE_CXX_COMPILER=clang-cl.exe -DCMAKE_LINKER=lld-link.exe -DLLVM_ENABLE_LLD=ON -DCMAKE_CXX_FLAGS="%OPTS%" -DCMAKE_C_FLAGS="%OPTS%" -DLLVM_ENABLE_LTO=THIN

```

I've profiled the linking step with no LTO cache, with Powershell, such

as:

```

Measure-Command { lld-link /nologo @CMakeFiles\clang.rsp /out:bin\clang.exe /implib:lib\clang.lib /pdb:bin\clang.pdb /version:0.0 /machine:x64 /STACK:10000000 /DEBUG /OPT:REF /OPT:ICF /INCREMENTAL:NO /subsystem:console /MANIFEST:EMBED,ID=1 }

```

Timings:

| Machine | Allocator | Time to link |
|--------|--------|--------|
| 16c/32t AMD Ryzen 9 5950X | Windows Heap | 10 min 38 sec |
| | **Rpmalloc** | **4 min 11 sec** |
| 32c/64t AMD Ryzen Threadripper PRO 3975WX | Windows Heap | 23 min 29 sec |
| | **Rpmalloc** | **2 min 11 sec** |
| | **Rpmalloc + /threads:64** | **1 min 50 sec** |
| 176 vCPU (2 socket) Intel Xeon Platinum 8481C (fixed clock 2.7 GHz) | Windows Heap | 43 min 40 sec |
| | **Rpmalloc** | **1 min 45 sec** |
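For a rough sense of scale, the link times in the table above can be turned into speedup factors (Windows Heap time divided by rpmalloc time). The helper below is a hypothetical convenience for that arithmetic, not part of the PR.

```shell
#!/bin/sh
# Convert "minutes seconds" pairs from the table above into seconds and
# print the Windows Heap / rpmalloc ratio for each machine.
speedup() {
  awk -v b_min="$1" -v b_sec="$2" -v a_min="$3" -v a_sec="$4" \
    'BEGIN { printf "%.1fx\n", (b_min * 60 + b_sec) / (a_min * 60 + a_sec) }'
}

speedup 10 38 4 11   # Ryzen 9 5950X: prints 2.5x
speedup 23 29 2 11   # Threadripper PRO 3975WX: prints 10.8x
speedup 43 40 1 45   # 176 vCPU Xeon: prints 25.0x
```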

This also improves the overall performance when building with clang-cl.

I've profiled a regular compilation of clang itself, i.e.:

```

set OPTS=/GS- /D_ITERATOR_DEBUG_LEVEL=0 /arch:AVX -fuse-ld=lld

cmake -G Ninja %ROOT%/llvm -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_ASSERTIONS=TRUE -DLLVM_ENABLE_PROJECTS="clang;lld" -DLLVM_ENABLE_PDB=ON -DLLVM_OPTIMIZED_TABLEGEN=ON -DCMAKE_C_COMPILER=clang-cl.exe -DCMAKE_CXX_COMPILER=clang-cl.exe -DCMAKE_LINKER=lld-link.exe -DLLVM_ENABLE_LLD=ON -DCMAKE_CXX_FLAGS="%OPTS%" -DCMAKE_C_FLAGS="%OPTS%"

```

This saves approx. 30 sec when building on the Threadripper PRO 3975WX:

```

(default Windows Heap)

C:\src\git\llvm-project>hyperfine -r 5 -p "make_llvm.bat stage1_test2" "ninja clang -C stage1_test2"

Benchmark 1: ninja clang -C stage1_test2

Time (mean ± σ): 392.716 s ± 3.830 s [User: 17734.025 s, System: 1078.674 s]

Range (min … max): 390.127 s … 399.449 s 5 runs

(rpmalloc)

C:\src\git\llvm-project>hyperfine -r 5 -p "make_llvm.bat stage1_test2" "ninja clang -C stage1_test2"

Benchmark 1: ninja clang -C stage1_test2

Time (mean ± σ): 360.824 s ± 1.162 s [User: 15148.637 s, System: 905.175 s]

Range (min … max): 359.208 s … 362.288 s 5 runs

```

This reverts commit b6824c9d459da059e247a60c1ebd1aeb580dacc2.

This relands commit 0a6c74e21cc6750c843310ab35b47763cddaaf32.

The original commit was reverted due to buildbot failures. These bots

should be updated now, so hopefully this will stick.