This is a reland of #96287. This change makes the tests in `logf128.ll` ignore the sign of NaNs for the negative-value tests, and guards an `#include <cmath>` behind `#ifndef _GLIBCXX_MATH_H`.

To guarantee that extracting 64 bits never requires reading more than 2 words, the word size must be 64 bits or more. If the word size is smaller than 64, e.g. 32, a 64-bit field that does not start on a word boundary straddles word boundaries, so you may need to read 3 words to get 64 bits.
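The word-count argument can be checked by brute force. The sketch below counts how many W-bit words a `width`-bit field touches at every possible starting offset and reports the worst case. The helper names are hypothetical and do not come from the patch.

```cpp
// Number of W-bit words touched when reading `width` bits starting at bit `offset`.
constexpr unsigned wordsTouched(unsigned offset, unsigned width, unsigned W) {
  unsigned first = offset / W;              // word holding the first bit
  unsigned last = (offset + width - 1) / W; // word holding the last bit
  return last - first + 1;
}

// Worst case over every starting offset within one word (offsets repeat every W bits).
constexpr unsigned worstCaseWords(unsigned width, unsigned W) {
  unsigned worst = 0;
  for (unsigned off = 0; off < W; ++off) {
    unsigned n = wordsTouched(off, width, W);
    if (n > worst)
      worst = n;
  }
  return worst;
}
```

With 64-bit words, a 64-bit read that is off by a single bit already spans 2 words, but never more. With 32-bit words, the same misalignment spans 3.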

https://reviews.llvm.org/D70447 switched to `ManagedStatic` to work around race conditions in MSVC runtimes and the MinGW runtime. However, this workaround is not suitable for other platforms (#66974).

`lld::fatal` calls `exitLld(1)`, which calls `llvm_shutdown` to destroy `ManagedStatic` objects. The worker threads then finish and invoke TLS destructors (glibc `__call_tls_dtors`). This can lead to race conditions if other threads attempt to access TLS objects that have already been destroyed.

While lld's early-exit mechanism needs more work, I believe Parallel.cpp should avoid this pitfall as well.

Pull Request: https://github.com/llvm/llvm-project/pull/102989

Since C23, this macro is defined by `float.h`, and Clang's own `float.h` has defined it since #96659 landed.

However, `regcomp.c` in LLVMSupport happened to define its own macro with that name, leading to problems when bootstrapping. This change renames the offending macro.

It's barely testable: the test does exercise the code, but it wouldn't fail on an empty implementation. An empty implementation would, however, leak memory (because the error handle wouldn't be unwrapped/reowned), and ASan and other leak detectors could catch that.

Since functions exported from DLLs are type-erased, before this patch I was seeing the new Clang 19 warning `-Wcast-function-type-mismatch` when building LLVM on Windows.
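One common way to silence this warning is to launder the type-erased pointer through `void *` before casting it to the concrete function type. The sketch below shows that pattern only. It is not necessarily the exact change in this patch. `GenericProc` is a hypothetical stand-in for the type-erased pointer type (such as `FARPROC`) that a DLL symbol lookup returns. Converting between function pointers and `void *` is conditionally supported in C++, but mainstream compilers accept it.

```cpp
#include <cstdint>

// Hypothetical stand-in for a type-erased function pointer such as FARPROC.
using GenericProc = std::intptr_t (*)();

// A direct reinterpret_cast from GenericProc to, e.g., int (*)(int) is a cast
// between incompatible function types, which -Wcast-function-type-mismatch
// flags. Going through void * first makes the type erasure explicit.
template <typename FnT> FnT castProc(GenericProc proc) {
  return reinterpret_cast<FnT>(reinterpret_cast<void *>(proc));
}

// Example function with a concrete signature, standing in for a DLL export.
int addOne(int x) { return x + 1; }

// Simulates what a symbol lookup hands back: the same address, type-erased.
inline GenericProc eraseType(int (*fn)(int)) {
  return reinterpret_cast<GenericProc>(reinterpret_cast<void *>(fn));
}
```

Callers then write `castProc<int (*)(int)>(proc)` instead of a single direct cast.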

Following discussion in 593f708118 (commitcomment-143905744), this PR adds the `f8E3M4` type to APFloat.

The `f8E3M4` type follows IEEE 754 conventions:

```
f8E3M4 (IEEE 754)
- Exponent bias: 3
- Maximum stored exponent value: 6 (binary 110)
- Maximum unbiased exponent value: 6 - 3 = 3
- Minimum stored exponent value: 1 (binary 001)
- Minimum unbiased exponent value: 1 - 3 = -2
- Precision specifies the total number of bits used for the significand (mantissa),
  including implicit leading integer bit = 4 + 1 = 5
- Follows IEEE 754 conventions for representation of special values
- Has Positive and Negative zero
- Has Positive and Negative infinity
- Has NaNs

Additional details:
- Max exp (unbiased): 3
- Min exp (unbiased): -2
- Infinities (+/-): S.111.0000
- Zeros (+/-): S.000.0000
- NaNs: S.111.{0,1}⁴ except S.111.0000
- Max normal number: S.110.1111 = +/-2^(6-3) x (1 + 15/16) = +/-2^3 x 31 x 2^(-4) = +/-15.5
- Min normal number: S.001.0000 = +/-2^(1-3) x (1 + 0) = +/-2^(-2)
- Max subnormal number: S.000.1111 = +/-2^(-2) x 15/16 = +/-2^(-2) x 15 x 2^(-4) = +/-15 x 2^(-6)
- Min subnormal number: S.000.0001 = +/-2^(-2) x 1/16 = +/-2^(-2) x 2^(-4) = +/-2^(-6)
```
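The values above can be cross-checked with a small decoder for the f8E3M4 bit layout: 1 sign bit, 3 exponent bits, 4 mantissa bits, bias 3. This is an illustrative sketch, not APFloat's implementation, and `decodeF8E3M4` is a hypothetical helper.

```cpp
#include <cmath>
#include <cstdint>
#include <limits>

// Decode an f8E3M4 value laid out as S.EEE.MMMM (exponent bias 3),
// with IEEE 754-style special values in the all-ones exponent.
double decodeF8E3M4(std::uint8_t bits) {
  const int sign = (bits >> 7) & 0x1;
  const int exp = (bits >> 4) & 0x7; // 3-bit stored exponent
  const int mant = bits & 0xF;       // 4-bit stored mantissa
  const int bias = 3;
  double magnitude;
  if (exp == 0x7) {
    // All-ones exponent: zero mantissa is infinity, anything else is NaN.
    magnitude = mant == 0 ? std::numeric_limits<double>::infinity()
                          : std::numeric_limits<double>::quiet_NaN();
  } else if (exp == 0) {
    // Subnormal: no implicit leading 1, exponent fixed at 1 - bias = -2.
    magnitude = std::ldexp(mant / 16.0, 1 - bias);
  } else {
    // Normal: implicit leading 1, i.e. (1 + M/16) x 2^(E - bias).
    magnitude = std::ldexp(1.0 + mant / 16.0, exp - bias);
  }
  return sign ? -magnitude : magnitude;
}
```

For example, `decodeF8E3M4(0x6F)` decodes S.110.1111 with S = 0 and yields the maximum normal value, 15.5.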

Related PRs:

- [PR-97179](https://github.com/llvm/llvm-project/pull/97179) [APFloat] Add support for f8E4M3 IEEE 754 type

Compared to the generic scalar code, using Arm NEON instructions yields a ~11x speedup: 31 vs 339.5 ms to hash 1 GiB of random data on the Apple M1.

This follows the upstream implementation closely, with some simplifications:

- Removed workarounds for suboptimal codegen on older GCC
- Removed instruction reordering barriers, which seem to have a negligible impact according to my measurements
- We do not support WebAssembly's mostly NEON-compatible API
- There is no configurable mixing of SIMD and scalar code; according to the upstream comments, this is only relevant for smaller Cortex cores, which can dispatch relatively few NEON micro-ops per cycle.

This commit intends to use only standard ACLE intrinsics and datatypes, so it should build with all supported versions of GCC, Clang and MSVC.

This feature is enabled by default when targeting AArch64, but the `LLVM_XXH_USE_NEON=0` macro can be set to explicitly disable it.

XXH3 is used for ICF, string deduplication and computing the UUID in ld64.lld; this commit results in a 1.77% +/- 0.59% reduction in link time for a `--threads=8` link of Chromium.framework.
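For context, the work that the NEON path vectorizes is XXH3's per-lane accumulate step. The sketch below follows the scalar round in upstream xxHash as I understand it. The helper names are illustrative, and it assumes a little-endian host. A NEON implementation computes the same per-lane results two 64-bit lanes at a time in 128-bit registers.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Read 8 bytes as a 64-bit value (assumes a little-endian host for brevity).
inline std::uint64_t readLE64(const unsigned char *p) {
  std::uint64_t v;
  std::memcpy(&v, p, sizeof(v));
  return v;
}

// One scalar accumulate round for a single 64-bit lane.
inline void scalarRound(std::uint64_t acc[8], const unsigned char *input,
                        const unsigned char *secret, std::size_t lane) {
  const std::uint64_t dataVal = readLE64(input + lane * 8);
  const std::uint64_t dataKey = dataVal ^ readLE64(secret + lane * 8);
  acc[lane ^ 1] += dataVal; // raw input is added to the adjacent lane
  // 32x32->64-bit multiply of the low and high halves of the keyed value.
  acc[lane] += (dataKey & 0xFFFFFFFFu) * (dataKey >> 32);
}

// Process one 64-byte stripe: 8 lanes of 8 bytes each.
inline void accumulateStripe(std::uint64_t acc[8], const unsigned char *input,
                             const unsigned char *secret) {
  for (std::size_t lane = 0; lane < 8; ++lane)
    scalarRound(acc, input, secret, lane);
}
```

Each lane's update is independent apart from the adjacent-lane swap. That is why the loop maps naturally onto vector registers.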

This PR implements `raw_socket_stream::read`, which overloads the base class `raw_fd_stream::read`. `raw_socket_stream::read` provides a way to time out the underlying `::read`. The timeout functionality was not added to `raw_fd_stream::read`, to avoid needlessly increasing compile times and to allow convenient code reuse with `raw_socket_stream::accept`, which also requires timeout functionality. This PR supports the module build daemon and will help guarantee that it never becomes a zombie process.
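The timeout idea can be sketched with POSIX `poll`: wait until the descriptor is readable or the deadline passes, and only then call `::read`. This is a minimal illustration under that assumption, not the actual `raw_socket_stream::read` code. `readWithTimeout` is a hypothetical name, and it reports a timeout as `-1` with `errno` set to `ETIMEDOUT`.

```cpp
#include <cerrno>
#include <chrono>
#include <cstddef>
#include <poll.h>
#include <sys/types.h>
#include <unistd.h>

// Read up to `size` bytes from `fd`, giving up if nothing becomes readable
// within `timeout`. Returns ::read's result, or -1 with errno set
// (ETIMEDOUT when the deadline passed without data).
ssize_t readWithTimeout(int fd, char *buf, std::size_t size,
                        std::chrono::milliseconds timeout) {
  pollfd pfd{};
  pfd.fd = fd;
  pfd.events = POLLIN;
  int ready;
  do {
    // Retry if a signal interrupts the wait (restarting the full timeout,
    // for simplicity).
    ready = ::poll(&pfd, 1, static_cast<int>(timeout.count()));
  } while (ready == -1 && errno == EINTR);
  if (ready == -1)
    return -1; // poll itself failed; errno is already set
  if (ready == 0) {
    errno = ETIMEDOUT; // no data arrived before the deadline
    return -1;
  }
  return ::read(fd, buf, size);
}
```

A caller that must never hang, such as a daemon waiting for a client, can treat `ETIMEDOUT` as a signal to shut down cleanly instead of blocking forever.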

This PR adds the `f8E4M3` type to APFloat.

The `f8E4M3` type follows IEEE 754 conventions:

```
f8E4M3 (IEEE 754)
- Exponent bias: 7
- Maximum stored exponent value: 14 (binary 1110)
- Maximum unbiased exponent value: 14 - 7 = 7
- Minimum stored exponent value: 1 (binary 0001)
- Minimum unbiased exponent value: 1 - 7 = -6
- Precision specifies the total number of bits used for the significand (mantissa),
  including implicit leading integer bit = 3 + 1 = 4
- Follows IEEE 754 conventions for representation of special values
- Has Positive and Negative zero
- Has Positive and Negative infinity
- Has NaNs

Additional details:
- Max exp (unbiased): 7
- Min exp (unbiased): -6
- Infinities (+/-): S.1111.000
- Zeros (+/-): S.0000.000
- NaNs: S.1111.{001, 010, 011, 100, 101, 110, 111}
- Max normal number: S.1110.111 = +/-2^(7) x (1 + 0.875) = +/-240
- Min normal number: S.0001.000 = +/-2^(-6)
- Max subnormal number: S.0000.111 = +/-2^(-6) x 0.875 = +/-2^(-9) x 7
- Min subnormal number: S.0000.001 = +/-2^(-6) x 0.125 = +/-2^(-9)
```
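The table's extremes can be verified by decoding all 256 encodings. The sketch below is illustrative only and is not APFloat code; `decodeF8E4M3` and `surveyF8E4M3` are hypothetical helpers. It assumes the S.EEEE.MMM layout with bias 7 described above.

```cpp
#include <cmath>
#include <cstdint>
#include <limits>

// Decode an f8E4M3 value: S.EEEE.MMM, exponent bias 7; exponent 1111 is
// infinity when the mantissa is 0 and NaN otherwise.
double decodeF8E4M3(std::uint8_t bits) {
  const int sign = bits >> 7;
  const int exp = (bits >> 3) & 0xF;
  const int mant = bits & 0x7;
  double magnitude;
  if (exp == 0xF)
    magnitude = mant == 0 ? std::numeric_limits<double>::infinity()
                          : std::numeric_limits<double>::quiet_NaN();
  else if (exp == 0)
    magnitude = std::ldexp(mant / 8.0, -6); // subnormal: 0.MMM x 2^(1-7)
  else
    magnitude = std::ldexp(1.0 + mant / 8.0, exp - 7); // normal: 1.MMM x 2^(E-7)
  return sign ? -magnitude : magnitude;
}

// Statistics gathered by decoding every possible encoding.
struct F8E4M3Stats {
  double maxFinite = 0;
  double minPositive = std::numeric_limits<double>::infinity();
  int nanCount = 0;
};

F8E4M3Stats surveyF8E4M3() {
  F8E4M3Stats s;
  for (int b = 0; b < 256; ++b) {
    const double v = decodeF8E4M3(static_cast<std::uint8_t>(b));
    if (std::isnan(v)) {
      ++s.nanCount;
      continue;
    }
    if (std::isinf(v))
      continue;
    if (v > s.maxFinite)
      s.maxFinite = v;
    if (v > 0 && v < s.minPositive)
      s.minPositive = v;
  }
  return s;
}
```

The survey yields a largest finite value of 240 and a smallest positive value of 2^(-9). It also finds 14 NaN encodings, 7 mantissa patterns for each sign.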

Related PRs:

- [PR-97118](https://github.com/llvm/llvm-project/pull/97118) Add f8E4M3 IEEE 754 type to mlir

The removal of `StringRef::equals` in 3fa409f2318ef790cc44836afe9a72830715ad84 broke the [Solaris/sparcv9](https://lab.llvm.org/buildbot/#/builders/13/builds/724) and [Solaris/amd64](https://lab.llvm.org/staging/#/builders/94/builds/5176) buildbots:

```
In file included from /vol/llvm/src/llvm-project/git/llvm/lib/Support/Path.cpp:1200:
/vol/llvm/src/llvm-project/git/llvm/lib/Support/Unix/Path.inc:519:18: error: no member named 'equals' in 'llvm::StringRef'
  519 |   return !fstype.equals("nfs");
      |           ~~~~~~ ^
```

Fixed by switching to `operator!=` instead.

Tested on sparcv9-sun-solaris2.11 and amd64-pc-solaris2.11.

Reuse `APInt::BitWidth` to eliminate `DynamicAPInt::HoldsLarge`, cutting the size of `DynamicAPInt` by four bytes. This is implemented by making `DynamicAPInt` a friend of `SlowDynamicAPInt` and `APInt`, so it can directly access `SlowDynamicAPInt::Val` and `APInt::BitWidth`.

We get a speedup of 4% with this patch.
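The general trick is to drop a separate "which union member is active" flag and encode that state in a field the large representation already carries. Below is a simplified, hypothetical layout, not the real `DynamicAPInt`/`APInt` definitions. A bit width of 0 marks the small inline case, on the assumption that a large value never has width 0. To keep the sketch free of union type-punning, the width field sits outside the union here.

```cpp
#include <cstdint>

// Baseline: an explicit tag stored alongside the union.
struct TaggedInt {
  union {
    std::int64_t small;
    struct {
      std::uint64_t *words;
      unsigned bitWidth;
    } large;
  };
  unsigned holdsLarge; // extra field whose only job is to say which member is live
};

// Compact: the bit-width slot doubles as the tag (0 means "small").
struct CompactInt {
  union {
    std::int64_t small;
    std::uint64_t *words;
  };
  unsigned bitWidth = 0; // 0 => `small` is active; > 0 => `words` is active

  bool isSmall() const { return bitWidth == 0; }
  void setSmall(std::int64_t v) {
    small = v;
    bitWidth = 0;
  }
  void setLarge(std::uint64_t *w, unsigned width) {
    words = w;
    bitWidth = width;
  }
};
```

On a typical 64-bit target, the compact layout avoids the padding that the extra tag field drags in.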

This PR lifts the body of `IEEEFloat::toString` out into a standalone function. We do this to facilitate code sharing with other floating-point types, e.g., the forthcoming support for HexFloat.

There is no change in functionality.

Since `raw_string_ostream` doesn't own the string buffer, it is desirable (in terms of memory safety) for users to reference the string buffer directly rather than use `raw_string_ostream::str()`.

This works toward the TODO comment about removing `raw_string_ostream::str()`.

Originally, `tie` was introduced by D81156 to flush stdout before writing to stderr. 030897523 reverted this due to race conditions. Nonetheless, the feature still costs performance: it adds an extra check in the "cold" path, which is actually the hot path for `raw_svector_ostream`. Given that this feature is only used for `errs()`, move it to `raw_fd_ostream` so that it no longer affects the performance of other stream classes.
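The design point is where the tie check lives. In the hypothetical sketch below (class names are illustrative, not LLVM's `raw_ostream` hierarchy), the generic buffered write path has no tie logic at all. Only the descriptor-like stream flushes its tied stream before emitting output, mirroring the move of the feature into `raw_fd_ostream`.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical, heavily simplified buffered stream.
class SimpleStream {
public:
  explicit SimpleStream(std::size_t bufferSize) : bufferSize(bufferSize) {}
  virtual ~SimpleStream() = default;

  void write(const std::string &s) {
    buffer += s;
    // Generic slow path: note there is no "tied stream" check here,
    // so in-memory streams pay nothing for the feature.
    if (buffer.size() >= bufferSize)
      flush();
  }

  void flush() {
    if (buffer.empty())
      return;
    writeImpl(buffer);
    buffer.clear();
  }

protected:
  virtual void writeImpl(const std::string &data) = 0;

private:
  std::string buffer;
  std::size_t bufferSize;
};

// Stands in for a file-descriptor stream; appends each write to a shared log.
class LogStream : public SimpleStream {
public:
  LogStream(std::vector<std::string> &log, std::size_t bufferSize)
      : SimpleStream(bufferSize), log(log) {}

  // Only this subclass knows about tying.
  void tie(SimpleStream *other) { tiedTo = other; }

protected:
  void writeImpl(const std::string &data) override {
    if (tiedTo)
      tiedTo->flush(); // e.g. flush buffered stdout before writing to stderr
    log.push_back(data);
  }

private:
  std::vector<std::string> &log;
  SimpleStream *tiedTo = nullptr;
};
```

Tying an unbuffered "stderr" to a buffered "stdout" keeps the two outputs in program order. Other stream types never execute the extra branch.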

Currently, `f8E4M3` is mapped to `Float8E4M3FNType`. This PR renames `f8E4M3` to `f8E4M3FN` to accurately reflect the actual type.

This PR is needed to avoid a name conflict with an upcoming PR that will add the IEEE 754 `Float8E4M3Type`: https://github.com/llvm/llvm-project/pull/97118 (Add f8E4M3 IEEE 754 type).

Maksim (@makslevental), can you review this PR?

Hashing.h provides `hash_value`/`hash_combine`/`hash_combine_range`, which are primarily used by `DenseMap<StringRef, X>`.

Users shouldn't rely on specific hash values, due to `size_t` differences between 32-bit and 64-bit platforms and potential algorithm changes.

`set_fixed_execution_hash_seed` is provided, but it has never been used.

In `LLVM_ENABLE_ABI_BREAKING_CHECKS` builds, take the address of a static storage duration variable as the seed, like `kSeed` in absl/hash/internal/hash.h. (See https://reviews.llvm.org/D93931 for a workaround for older Clang. Mach-O x86-64 forces PIC, so absl's `__apple_build_version__` check is unnecessary.)

`LLVM_ENABLE_ABI_BREAKING_CHECKS` defaults to `WITH_ASSERTS` and is enabled in an assertion build.

In a non-assertion build, `get_execution_seed` returns the fixed value regardless of `NDEBUG`. Removing a variable load yields a noticeable size/performance improvement.
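A minimal sketch of the seeding idea follows. It uses a hypothetical `RANDOMIZE_HASH_SEED` macro in place of `LLVM_ENABLE_ABI_BREAKING_CHECKS`. The fixed constant and the FNV-1a mixing are arbitrary illustrative choices, not what Hashing.h uses. With address space layout randomization, the anchor's address changes from run to run but stays constant within one process.

```cpp
#include <cstdint>
#include <string_view>

// Hypothetical stand-in for LLVM_ENABLE_ABI_BREAKING_CHECKS.
#ifndef RANDOMIZE_HASH_SEED
#define RANDOMIZE_HASH_SEED 1
#endif

namespace {
// Any object with static storage duration works; only its address matters.
const char seedAnchor = 0;
} // namespace

inline std::uint64_t getExecutionSeed() {
#if RANDOMIZE_HASH_SEED
  // Varies between runs under ASLR, so code that depends on a particular
  // hash (and thus iteration) order breaks quickly in checking builds.
  return static_cast<std::uint64_t>(
      reinterpret_cast<std::uintptr_t>(&seedAnchor));
#else
  // Arbitrary fixed constant: no memory load, identical across runs.
  return 0xff51afd7ed558ccdULL;
#endif
}

// Toy seeded FNV-1a string hash, only to show where the seed enters.
inline std::uint64_t hashString(std::string_view s) {
  std::uint64_t h = 0xcbf29ce484222325ULL ^ getExecutionSeed();
  for (unsigned char c : s) {
    h ^= c;
    h *= 0x100000001b3ULL;
  }
  return h;
}
```

Hashes are stable within a process, which is all a hash table needs. Across processes they are not, which flushes out accidental ordering dependencies.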

A few users relying on the iteration order of `DenseMap<StringRef, X>` have been fixed (e.g., f8f4235612b9 c025bd1fdbbd 89e8e63f47ff 86eb6bf6715c eb8d03656549 0ea6b8e476c2 58d7a6e0e636 8ea31db27211 592abf29f9f7 664497557ae7).

From my experience fixing [`StringMap`](https://discourse.llvm.org/t/reverse-iteration-bots/72224) iteration order issues, the scale of issues is similar.

Pull Request: https://github.com/llvm/llvm-project/pull/96282

`__emulu` is used without including `intrin.h`. Actually, it's better to rely on compiler optimizations. In this LLVM copy, we try to eliminate unneeded workarounds for old compilers.
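The portable replacement is plain C++: widen one operand to 64 bits and multiply. Modern optimizing compilers generally recognize this pattern and emit a single widening multiply. The helper name below is hypothetical.

```cpp
#include <cstdint>

// Portable 32x32->64-bit multiply; no compiler-specific intrinsic needed.
constexpr std::uint64_t mult32to64(std::uint32_t a, std::uint32_t b) {
  return static_cast<std::uint64_t>(a) * b; // b is promoted to uint64_t too
}
```

Because both operands fit in 32 bits, the 64-bit product can never overflow.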

Pull Request: https://github.com/llvm/llvm-project/pull/96931

`SmallVector` has a special case to allow a vector of `char` to exceed 4 GB in size on 64-bit hosts. Apply this special case only for 8-bit element types, instead of all element types < 32 bits.

This makes `SmallVector<MCPhysReg>` more compact because `MCPhysReg` is `uint16_t`.
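The rule change can be sketched with a type alias. This mirrors the description above rather than reproducing `SmallVector.h` verbatim, and `OldSizeType`, `NewSizeType` and `Header` are hypothetical names. On a typical 64-bit host, a header of pointer + 32-bit size + 32-bit capacity takes 16 bytes, versus 24 with 64-bit fields.

```cpp
#include <cstdint>
#include <type_traits>

// Old rule: 64-bit size/capacity for every element type smaller than 4 bytes.
template <class T>
using OldSizeType =
    std::conditional_t<sizeof(T) < 4 && sizeof(void *) >= 8, std::uint64_t,
                       std::uint32_t>;

// New rule: only 1-byte element types get 64-bit size/capacity.
template <class T>
using NewSizeType =
    std::conditional_t<sizeof(T) == 1 && sizeof(void *) >= 8, std::uint64_t,
                       std::uint32_t>;

// Simplified vector header: begin pointer plus size and capacity.
template <class SizeT> struct Header {
  void *begin;
  SizeT size;
  SizeT capacity;
};
```

Under the new rule, a `uint16_t` vector keeps 32-bit bookkeeping fields and sheds 8 bytes of header on 64-bit hosts. A `char` vector can still grow past 4 GB.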

---------

Co-authored-by: Nikita Popov <github@npopov.com>

LLVM's build system links against the static zstd library correctly, but `LLVM_SYSTEM_LIBS` ends up containing the shared library. Emit the static library instead when appropriate.

With LLVM_USE_STATIC_ZSTD, before:

khuey@zhadum:~/dev/llvm-project/build$ ./bin/llvm-config --system-libs

-lrt -ldl -lm -lz -lzstd -lxml2

after:

khuey@zhadum:~/dev/llvm-project/build$ ./bin/llvm-config --system-libs

-lrt -ldl -lm -lz /usr/local/lib/libzstd.a -lxml2

The two variables using `ManagedStatic` that are exported by this header are not actually used directly anywhere; they are accessed through `SubCommand::getTopLevel()` and `SubCommand::getAll()` instead. Drop the extern declarations and the include.

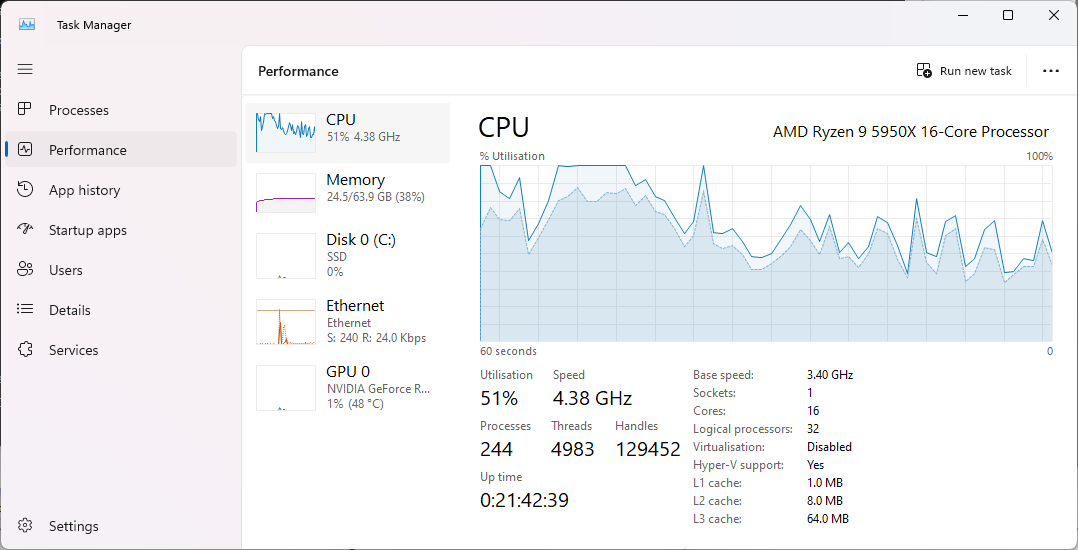

### Context

We have a longstanding performance issue on Windows, where to this day the default heap allocator is still lock-based. As core counts increase, building and using LLVM with the default Windows heap allocator is suboptimal. Notably, ThinLTO link times with LLD are extremely long, and increase proportionally with the number of cores in the machine.

In a6a37a2fcd, I introduced the ability to build LLVM with several popular lock-free allocators. Downstream users, however, have to build their own toolchain with this option, and building an optimal toolchain is a bit tedious and long. Additionally, LLVM is now integrated into Visual Studio, which AFAIK redistributes the vanilla LLVM binaries/installer. The point is that many users are impacted and might not be aware of this problem, or are unable to build a more optimal version of the toolchain.

The symptom before this PR is that most of the CPU time goes to the kernel (darker blue) when linking with ThinLTO.

With this PR, most time is spent in user space (light blue).

On higher core count machines, before this PR, the CPU usage becomes pretty much flat because of contention:

<img width="549" alt="VM_176_windows_heap" src="https://github.com/llvm/llvm-project/assets/37383324/f27d5800-ee02-496d-a4e7-88177e0727f0">

Similarly, with this PR, most of the available CPU time is now used:

<img width="549" alt="VM_176_with_rpmalloc" src="https://github.com/llvm/llvm-project/assets/37383324/7d4785dd-94a7-4f06-9b16-aaa4e2e505c8">

### Changes in this PR

The avenue I've taken here is to vendor/re-licence rpmalloc in-tree, and use it when building the Windows 64-bit release. Given the permissive rpmalloc licence, prior discussions with the LLVM foundation and @lattner suggested this vendoring. Rpmalloc's author (@mjansson) kindly agreed to ~~donate~~ re-licence the rpmalloc code in LLVM (please do correct me if I misinterpreted our past communications).

I've chosen rpmalloc because it's small and gives the best value overall. The source code is only 4 .c files. Rpmalloc statically replaces the weak CRT alloc symbols at link time and, unlike mimalloc, does no dynamic patching. As an alternative, Russell Gallop made several unsuccessful attempts to use SCUDO in the past; please see the thread in https://reviews.llvm.org/D86694. If someone later comes up with a PR of similar performance that uses SCUDO, we could then delete this vendored rpmalloc folder.

I've added a new CMake flag, `LLVM_ENABLE_RPMALLOC`, which essentially sets `LLVM_INTEGRATED_CRT_ALLOC` to the in-tree rpmalloc source.

### Performance

The most obvious test is profiling a ThinLTO linking step with LLD. I've used a Clang compilation as a testbed, i.e.:

```
set OPTS=/GS- /D_ITERATOR_DEBUG_LEVEL=0 -Xclang -O3 -fstrict-aliasing -march=native -flto=thin -fwhole-program-vtables -fuse-ld=lld
cmake -G Ninja %ROOT%/llvm -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_ASSERTIONS=TRUE -DLLVM_ENABLE_PROJECTS="clang" -DLLVM_ENABLE_PDB=ON -DLLVM_OPTIMIZED_TABLEGEN=ON -DCMAKE_C_COMPILER=clang-cl.exe -DCMAKE_CXX_COMPILER=clang-cl.exe -DCMAKE_LINKER=lld-link.exe -DLLVM_ENABLE_LLD=ON -DCMAKE_CXX_FLAGS="%OPTS%" -DCMAKE_C_FLAGS="%OPTS%" -DLLVM_ENABLE_LTO=THIN
```

I've profiled the linking step with no LTO cache, using PowerShell:

```
Measure-Command { lld-link /nologo @CMakeFiles\clang.rsp /out:bin\clang.exe /implib:lib\clang.lib /pdb:bin\clang.pdb /version:0.0 /machine:x64 /STACK:10000000 /DEBUG /OPT:REF /OPT:ICF /INCREMENTAL:NO /subsystem:console /MANIFEST:EMBED,ID=1 }
```

Timings:

| Machine | Allocator | Time to link |
|--------|--------|--------|
| 16c/32t AMD Ryzen 9 5950X | Windows Heap | 10 min 38 sec |
| | **Rpmalloc** | **4 min 11 sec** |
| 32c/64t AMD Ryzen Threadripper PRO 3975WX | Windows Heap | 23 min 29 sec |
| | **Rpmalloc** | **2 min 11 sec** |
| | **Rpmalloc + /threads:64** | **1 min 50 sec** |
| 176 vCPU (2 socket) Intel Xeon Platinum 8481C (fixed clock 2.7 GHz) | Windows Heap | 43 min 40 sec |
| | **Rpmalloc** | **1 min 45 sec** |

This also improves overall performance when building with clang-cl. I've profiled a regular compilation of clang itself, i.e.:

```
set OPTS=/GS- /D_ITERATOR_DEBUG_LEVEL=0 /arch:AVX -fuse-ld=lld
cmake -G Ninja %ROOT%/llvm -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_ASSERTIONS=TRUE -DLLVM_ENABLE_PROJECTS="clang;lld" -DLLVM_ENABLE_PDB=ON -DLLVM_OPTIMIZED_TABLEGEN=ON -DCMAKE_C_COMPILER=clang-cl.exe -DCMAKE_CXX_COMPILER=clang-cl.exe -DCMAKE_LINKER=lld-link.exe -DLLVM_ENABLE_LLD=ON -DCMAKE_CXX_FLAGS="%OPTS%" -DCMAKE_C_FLAGS="%OPTS%"
```

This saves approx. 32 sec (about 8%) when building on the Threadripper PRO 3975WX:

```
(default Windows Heap)
C:\src\git\llvm-project>hyperfine -r 5 -p "make_llvm.bat stage1_test2" "ninja clang -C stage1_test2"
Benchmark 1: ninja clang -C stage1_test2
  Time (mean ± σ): 392.716 s ± 3.830 s [User: 17734.025 s, System: 1078.674 s]
  Range (min … max): 390.127 s … 399.449 s 5 runs

(rpmalloc)
C:\src\git\llvm-project>hyperfine -r 5 -p "make_llvm.bat stage1_test2" "ninja clang -C stage1_test2"
Benchmark 1: ninja clang -C stage1_test2
  Time (mean ± σ): 360.824 s ± 1.162 s [User: 15148.637 s, System: 905.175 s]
  Range (min … max): 359.208 s … 362.288 s 5 runs
```

Add a 128-bit xxhash function, following the existing `llvm::xxh3_64bits` and `llvm::xxHash` implementations. Previously, 48e93f57f1ee914ca29aa31bf2ccd916565a3610 added support for `llvm::xxh3_64bits`, which closely follows the upstream implementation at https://github.com/Cyan4973/xxHash, with simplifications from Devin Hussey's xxhash-clean.

However, it is desirable to have a larger 128-bit hash key for use cases such as filesystem checksums, where the chance of collision needs to be negligible.

To that end, this also ports over the 128-bit xxh3_128bits as `llvm::xxh3_128bits`.
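To put "negligible" in numbers, the standard birthday-bound approximation gives the chance that any two of n items collide. It assumes ideal, uniformly distributed hashes. For a billion items, this is roughly 2.7% for a 64-bit hash and about 1.5e-21 for a 128-bit one. `collisionProbability` below is a hypothetical helper, not part of LLVM.

```cpp
#include <cmath>

// Approximate probability that at least two of `n` uniformly random
// `bits`-bit hashes collide: 1 - exp(-n(n-1) / 2^(bits+1)).
double collisionProbability(double n, int bits) {
  const double pairsOverSpace = n * (n - 1) / std::ldexp(1.0, bits + 1);
  return -std::expm1(-pairsOverSpace); // expm1 stays accurate for tiny values
}
```

Doubling the hash width squares the size of the output space, which pushes the collision risk at realistic data volumes far below any practical concern.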

Testing:

- Add a test based on xsum_sanity_check.c in upstream xxhash.

This PR depends on https://github.com/llvm/llvm-project/pull/90264

In the current implementation, only the leaf children of each internal node in the suffix tree are included as candidates for outlining. But all leaf descendants are outlining candidates, and the new implementation includes them. This is controlled by a flag, `outliner-leaf-descendants`, which defaults to true.
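The difference can be seen on a toy tree. In a suffix tree, every leaf below an internal node marks one start index of the substring that node represents. Stopping at direct leaf children can therefore miss occurrences reached through deeper internal nodes. The node type and functions below are hypothetical simplifications, not LLVM's `SuffixTree` API.

```cpp
#include <memory>
#include <utility>
#include <vector>

// Minimal suffix-tree node: leaves record the start index of their suffix.
struct Node {
  int suffixIdx = -1; // meaningful only for leaves
  std::vector<std::unique_ptr<Node>> children;
  bool isLeaf() const { return children.empty(); }
};

// Old behaviour: only leaves that are direct children count as occurrences.
std::vector<int> leafChildren(const Node &n) {
  std::vector<int> out;
  for (const auto &c : n.children)
    if (c->isLeaf())
      out.push_back(c->suffixIdx);
  return out;
}

// New behaviour: every leaf in the subtree is an occurrence of the
// substring that `n` represents.
void collectLeafDescendants(const Node &n, std::vector<int> &out) {
  for (const auto &c : n.children) {
    if (c->isLeaf())
      out.push_back(c->suffixIdx);
    else
      collectLeafDescendants(*c, out);
  }
}
```

For a node with one leaf child and one internal child holding two leaves, the old rule finds 1 occurrence and the new rule finds all 3.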

The reason for _putting this behind a flag_ is that the machine outliner is not the only pass that uses the suffix tree.

The reason for _having this default to true_ is that including all leaf descendants shows a consistent size win.

* For Clang/LLD, it shows around a 3% reduction in text segment size when compared to the baseline `-Oz` linker binary.
* For selected benchmark tests in the LLVM test suite:

| run (CTMark/) | only leaf children | all leaf descendants | reduction % |
|------------------|--------------------|----------------------|-------------|
| lencod | 349624 | 348564 | -0.2004% |
| SPASS | 219672 | 218440 | -0.4738% |
| kc | 271956 | 250068 | -0.4506% |
| sqlite3 | 223920 | 222484 | -0.5471% |
| 7zip-benchmark | 405364 | 401244 | -0.3428% |
| bullet | 139820 | 138340 | -0.8315% |
| consumer-typeset | 295684 | 286628 | -1.2295% |
| pairlocalalign | 72236 | 71936 | -0.2164% |
| tramp3d-v4 | 189572 | 183676 | -2.9668% |

This is part of an enhanced version of the machine outliner; see the [RFC](https://discourse.llvm.org/t/rfc-enhanced-machine-outliner-part-1-fulllto-part-2-thinlto-nolto-to-come/78732).