mirror of

https://github.com/llvm/llvm-project.git

synced 2025-04-27 17:06:05 +00:00

### Context

We have a longstanding performance issue on Windows, where to this day,

the default heap allocator is still lockfull. With the number of cores

increasing, building and using LLVM with the default Windows heap

allocator is sub-optimal. Notably, the ThinLTO link times with LLD are

extremely long, and increase proportionally with the number of cores in

the machine.

In

a6a37a2fcd,

I introduced the ability build LLVM with several popular lock-free

allocators. Downstream users however have to build their own toolchain

with this option, and building an optimal toolchain is a bit tedious and

long. Additionally, LLVM is now integrated into Visual Studio, which

AFAIK re-distributes the vanilla LLVM binaries/installer. The point

being that many users are impacted and might not be aware of this

problem, or are unable to build a more optimal version of the toolchain.

The symptom before this PR is that most of the CPU time goes to the

kernel (darker blue) when linking with ThinLTO:

With this PR, most time is spent in user space (light blue):

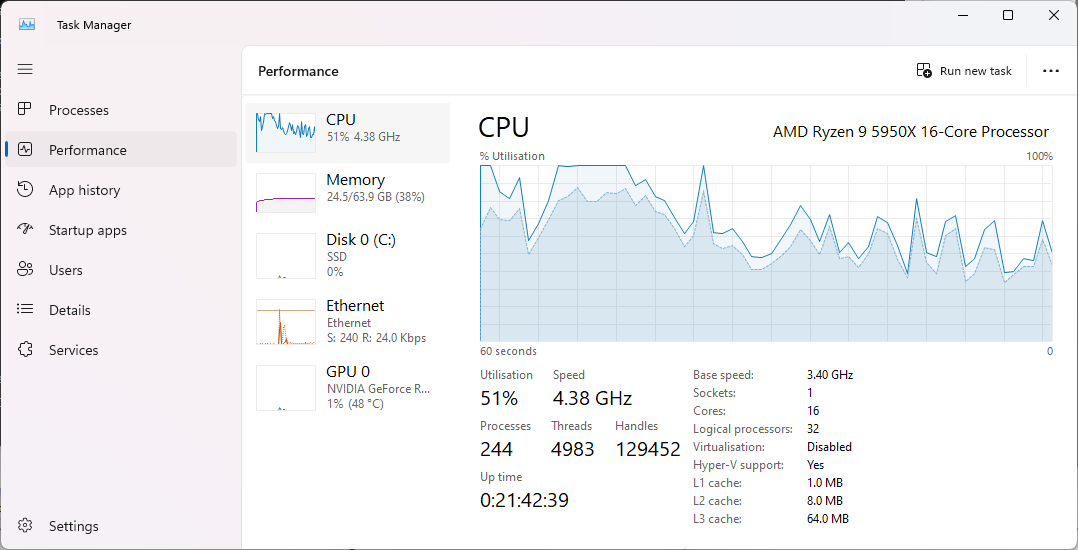

On higher core count machines, before this PR, the CPU usage becomes

pretty much flat because of contention:

<img width="549" alt="VM_176_windows_heap"

src="https://github.com/llvm/llvm-project/assets/37383324/f27d5800-ee02-496d-a4e7-88177e0727f0">

With this PR, similarily most CPU time is now used:

<img width="549" alt="VM_176_with_rpmalloc"

src="https://github.com/llvm/llvm-project/assets/37383324/7d4785dd-94a7-4f06-9b16-aaa4e2e505c8">

### Changes in this PR

The avenue I've taken here is to vendor/re-licence rpmalloc in-tree, and

use it when building the Windows 64-bit release. Given the permissive

rpmalloc licence, prior discussions with the LLVM foundation and

@lattner suggested this vendoring. Rpmalloc's author (@mjansson) kindly

agreed to ~~donate~~ re-licence the rpmalloc code in LLVM (please do

correct me if I misinterpreted our past communications).

I've chosen rpmalloc because it's small and gives the best value

overall. The source code is only 4 .c files. Rpmalloc is statically

replacing the weak CRT alloc symbols at link time, and has no dynamic

patching like mimalloc. As an alternative, there were several

unsuccessfull attempts made by Russell Gallop to use SCUDO in the past,

please see thread in https://reviews.llvm.org/D86694. If later someone

comes up with a PR of similar performance that uses SCUDO, we could then

delete this vendored rpmalloc folder.

I've added a new cmake flag `LLVM_ENABLE_RPMALLOC` which essentialy sets

`LLVM_INTEGRATED_CRT_ALLOC` to the in-tree rpmalloc source.

### Performance

The most obvious test is profling a ThinLTO linking step with LLD. I've

used a Clang compilation as a testbed, ie.

```

set OPTS=/GS- /D_ITERATOR_DEBUG_LEVEL=0 -Xclang -O3 -fstrict-aliasing -march=native -flto=thin -fwhole-program-vtables -fuse-ld=lld

cmake -G Ninja %ROOT%/llvm -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_ASSERTIONS=TRUE -DLLVM_ENABLE_PROJECTS="clang" -DLLVM_ENABLE_PDB=ON -DLLVM_OPTIMIZED_TABLEGEN=ON -DCMAKE_C_COMPILER=clang-cl.exe -DCMAKE_CXX_COMPILER=clang-cl.exe -DCMAKE_LINKER=lld-link.exe -DLLVM_ENABLE_LLD=ON -DCMAKE_CXX_FLAGS="%OPTS%" -DCMAKE_C_FLAGS="%OPTS%" -DLLVM_ENABLE_LTO=THIN

```

I've profiled the linking step with no LTO cache, with Powershell, such

as:

```

Measure-Command { lld-link /nologo @CMakeFiles\clang.rsp /out:bin\clang.exe /implib:lib\clang.lib /pdb:bin\clang.pdb /version:0.0 /machine:x64 /STACK:10000000 /DEBUG /OPT:REF /OPT:ICF /INCREMENTAL:NO /subsystem:console /MANIFEST:EMBED,ID=1 }`

```

Timings:

| Machine | Allocator | Time to link |

|--------|--------|--------|

| 16c/32t AMD Ryzen 9 5950X | Windows Heap | 10 min 38 sec |

| | **Rpmalloc** | **4 min 11 sec** |

| 32c/64t AMD Ryzen Threadripper PRO 3975WX | Windows Heap | 23 min 29

sec |

| | **Rpmalloc** | **2 min 11 sec** |

| | **Rpmalloc + /threads:64** | **1 min 50 sec** |

| 176 vCPU (2 socket) Intel Xeon Platinium 8481C (fixed clock 2.7 GHz) |

Windows Heap | 43 min 40 sec |

| | **Rpmalloc** | **1 min 45 sec** |

This also improves the overall performance when building with clang-cl.

I've profiled a regular compilation of clang itself, ie:

```

set OPTS=/GS- /D_ITERATOR_DEBUG_LEVEL=0 /arch:AVX -fuse-ld=lld

cmake -G Ninja %ROOT%/llvm -DCMAKE_BUILD_TYPE=Release -DLLVM_ENABLE_ASSERTIONS=TRUE -DLLVM_ENABLE_PROJECTS="clang;lld" -DLLVM_ENABLE_PDB=ON -DLLVM_OPTIMIZED_TABLEGEN=ON -DCMAKE_C_COMPILER=clang-cl.exe -DCMAKE_CXX_COMPILER=clang-cl.exe -DCMAKE_LINKER=lld-link.exe -DLLVM_ENABLE_LLD=ON -DCMAKE_CXX_FLAGS="%OPTS%" -DCMAKE_C_FLAGS="%OPTS%"

```

This saves approx. 30 sec when building on the Threadripper PRO 3975WX:

```

(default Windows Heap)

C:\src\git\llvm-project>hyperfine -r 5 -p "make_llvm.bat stage1_test2" "ninja clang -C stage1_test2"

Benchmark 1: ninja clang -C stage1_test2

Time (mean ± σ): 392.716 s ± 3.830 s [User: 17734.025 s, System: 1078.674 s]

Range (min … max): 390.127 s … 399.449 s 5 runs

(rpmalloc)

C:\src\git\llvm-project>hyperfine -r 5 -p "make_llvm.bat stage1_test2" "ninja clang -C stage1_test2"

Benchmark 1: ninja clang -C stage1_test2

Time (mean ± σ): 360.824 s ± 1.162 s [User: 15148.637 s, System: 905.175 s]

Range (min … max): 359.208 s … 362.288 s 5 runs

```

3993 lines

144 KiB

C

3993 lines

144 KiB

C

//===---------------------- rpmalloc.c ------------------*- C -*-=============//

|

|

//

|

|

// Part of the LLVM Project, under the Apache License v2.0 with LLVM Exceptions.

|

|

// See https://llvm.org/LICENSE.txt for license information.

|

|

// SPDX-License-Identifier: Apache-2.0 WITH LLVM-exception

|

|

//

|

|

//===----------------------------------------------------------------------===//

|

|

//

|

|

// This library provides a cross-platform lock free thread caching malloc

|

|

// implementation in C11.

|

|

//

|

|

//===----------------------------------------------------------------------===//

|

|

|

|

#include "rpmalloc.h"

|

|

|

|

////////////

|

|

///

|

|

/// Build time configurable limits

|

|

///

|

|

//////

|

|

|

|

#if defined(__clang__)

|

|

#pragma clang diagnostic ignored "-Wunused-macros"

|

|

#pragma clang diagnostic ignored "-Wunused-function"

|

|

#if __has_warning("-Wreserved-identifier")

|

|

#pragma clang diagnostic ignored "-Wreserved-identifier"

|

|

#endif

|

|

#if __has_warning("-Wstatic-in-inline")

|

|

#pragma clang diagnostic ignored "-Wstatic-in-inline"

|

|

#endif

|

|

#elif defined(__GNUC__)

|

|

#pragma GCC diagnostic ignored "-Wunused-macros"

|

|

#pragma GCC diagnostic ignored "-Wunused-function"

|

|

#endif

|

|

|

|

#if !defined(__has_builtin)

|

|

#define __has_builtin(b) 0

|

|

#endif

|

|

|

|

#if defined(__GNUC__) || defined(__clang__)

|

|

|

|

#if __has_builtin(__builtin_memcpy_inline)

|

|

#define _rpmalloc_memcpy_const(x, y, s) __builtin_memcpy_inline(x, y, s)

|

|

#else

|

|

#define _rpmalloc_memcpy_const(x, y, s) \

|

|

do { \

|

|

_Static_assert(__builtin_choose_expr(__builtin_constant_p(s), 1, 0), \

|

|

"len must be a constant integer"); \

|

|

memcpy(x, y, s); \

|

|

} while (0)

|

|

#endif

|

|

|

|

#if __has_builtin(__builtin_memset_inline)

|

|

#define _rpmalloc_memset_const(x, y, s) __builtin_memset_inline(x, y, s)

|

|

#else

|

|

#define _rpmalloc_memset_const(x, y, s) \

|

|

do { \

|

|

_Static_assert(__builtin_choose_expr(__builtin_constant_p(s), 1, 0), \

|

|

"len must be a constant integer"); \

|

|

memset(x, y, s); \

|

|

} while (0)

|

|

#endif

|

|

#else

|

|

#define _rpmalloc_memcpy_const(x, y, s) memcpy(x, y, s)

|

|

#define _rpmalloc_memset_const(x, y, s) memset(x, y, s)

|

|

#endif

|

|

|

|

#if __has_builtin(__builtin_assume)

|

|

#define rpmalloc_assume(cond) __builtin_assume(cond)

|

|

#elif defined(__GNUC__)

|

|

#define rpmalloc_assume(cond) \

|

|

do { \

|

|

if (!__builtin_expect(cond, 0)) \

|

|

__builtin_unreachable(); \

|

|

} while (0)

|

|

#elif defined(_MSC_VER)

|

|

#define rpmalloc_assume(cond) __assume(cond)

|

|

#else

|

|

#define rpmalloc_assume(cond) 0

|

|

#endif

|

|

|

|

#ifndef HEAP_ARRAY_SIZE

|

|

//! Size of heap hashmap

|

|

#define HEAP_ARRAY_SIZE 47

|

|

#endif

|

|

#ifndef ENABLE_THREAD_CACHE

|

|

//! Enable per-thread cache

|

|

#define ENABLE_THREAD_CACHE 1

|

|

#endif

|

|

#ifndef ENABLE_GLOBAL_CACHE

|

|

//! Enable global cache shared between all threads, requires thread cache

|

|

#define ENABLE_GLOBAL_CACHE 1

|

|

#endif

|

|

#ifndef ENABLE_VALIDATE_ARGS

|

|

//! Enable validation of args to public entry points

|

|

#define ENABLE_VALIDATE_ARGS 0

|

|

#endif

|

|

#ifndef ENABLE_STATISTICS

|

|

//! Enable statistics collection

|

|

#define ENABLE_STATISTICS 0

|

|

#endif

|

|

#ifndef ENABLE_ASSERTS

|

|

//! Enable asserts

|

|

#define ENABLE_ASSERTS 0

|

|

#endif

|

|

#ifndef ENABLE_OVERRIDE

|

|

//! Override standard library malloc/free and new/delete entry points

|

|

#define ENABLE_OVERRIDE 0

|

|

#endif

|

|

#ifndef ENABLE_PRELOAD

|

|

//! Support preloading

|

|

#define ENABLE_PRELOAD 0

|

|

#endif

|

|

#ifndef DISABLE_UNMAP

|

|

//! Disable unmapping memory pages (also enables unlimited cache)

|

|

#define DISABLE_UNMAP 0

|

|

#endif

|

|

#ifndef ENABLE_UNLIMITED_CACHE

|

|

//! Enable unlimited global cache (no unmapping until finalization)

|

|

#define ENABLE_UNLIMITED_CACHE 0

|

|

#endif

|

|

#ifndef ENABLE_ADAPTIVE_THREAD_CACHE

|

|

//! Enable adaptive thread cache size based on use heuristics

|

|

#define ENABLE_ADAPTIVE_THREAD_CACHE 0

|

|

#endif

|

|

#ifndef DEFAULT_SPAN_MAP_COUNT

|

|

//! Default number of spans to map in call to map more virtual memory (default

|

|

//! values yield 4MiB here)

|

|

#define DEFAULT_SPAN_MAP_COUNT 64

|

|

#endif

|

|

#ifndef GLOBAL_CACHE_MULTIPLIER

|

|

//! Multiplier for global cache

|

|

#define GLOBAL_CACHE_MULTIPLIER 8

|

|

#endif

|

|

|

|

#if DISABLE_UNMAP && !ENABLE_GLOBAL_CACHE

|

|

#error Must use global cache if unmap is disabled

|

|

#endif

|

|

|

|

#if DISABLE_UNMAP

|

|

#undef ENABLE_UNLIMITED_CACHE

|

|

#define ENABLE_UNLIMITED_CACHE 1

|

|

#endif

|

|

|

|

#if !ENABLE_GLOBAL_CACHE

|

|

#undef ENABLE_UNLIMITED_CACHE

|

|

#define ENABLE_UNLIMITED_CACHE 0

|

|

#endif

|

|

|

|

#if !ENABLE_THREAD_CACHE

|

|

#undef ENABLE_ADAPTIVE_THREAD_CACHE

|

|

#define ENABLE_ADAPTIVE_THREAD_CACHE 0

|

|

#endif

|

|

|

|

#if defined(_WIN32) || defined(__WIN32__) || defined(_WIN64)

|

|

#define PLATFORM_WINDOWS 1

|

|

#define PLATFORM_POSIX 0

|

|

#else

|

|

#define PLATFORM_WINDOWS 0

|

|

#define PLATFORM_POSIX 1

|

|

#endif

|

|

|

|

/// Platform and arch specifics

|

|

#if defined(_MSC_VER) && !defined(__clang__)

|

|

#pragma warning(disable : 5105)

|

|

#ifndef FORCEINLINE

|

|

#define FORCEINLINE inline __forceinline

|

|

#endif

|

|

#define _Static_assert static_assert

|

|

#else

|

|

#ifndef FORCEINLINE

|

|

#define FORCEINLINE inline __attribute__((__always_inline__))

|

|

#endif

|

|

#endif

|

|

#if PLATFORM_WINDOWS

|

|

#ifndef WIN32_LEAN_AND_MEAN

|

|

#define WIN32_LEAN_AND_MEAN

|

|

#endif

|

|

#include <windows.h>

|

|

#if ENABLE_VALIDATE_ARGS

|

|

#include <intsafe.h>

|

|

#endif

|

|

#else

|

|

#include <stdio.h>

|

|

#include <stdlib.h>

|

|

#include <time.h>

|

|

#include <unistd.h>

|

|

#if defined(__linux__) || defined(__ANDROID__)

|

|

#include <sys/prctl.h>

|

|

#if !defined(PR_SET_VMA)

|

|

#define PR_SET_VMA 0x53564d41

|

|

#define PR_SET_VMA_ANON_NAME 0

|

|

#endif

|

|

#endif

|

|

#if defined(__APPLE__)

|

|

#include <TargetConditionals.h>

|

|

#if !TARGET_OS_IPHONE && !TARGET_OS_SIMULATOR

|

|

#include <mach/mach_vm.h>

|

|

#include <mach/vm_statistics.h>

|

|

#endif

|

|

#include <pthread.h>

|

|

#endif

|

|

#if defined(__HAIKU__) || defined(__TINYC__)

|

|

#include <pthread.h>

|

|

#endif

|

|

#endif

|

|

|

|

#include <errno.h>

|

|

#include <stdint.h>

|

|

#include <string.h>

|

|

|

|

#if defined(_WIN32) && (!defined(BUILD_DYNAMIC_LINK) || !BUILD_DYNAMIC_LINK)

|

|

#include <fibersapi.h>

|

|

static DWORD fls_key;

|

|

#endif

|

|

|

|

#if PLATFORM_POSIX

|

|

#include <sched.h>

|

|

#include <sys/mman.h>

|

|

#ifdef __FreeBSD__

|

|

#include <sys/sysctl.h>

|

|

#define MAP_HUGETLB MAP_ALIGNED_SUPER

|

|

#ifndef PROT_MAX

|

|

#define PROT_MAX(f) 0

|

|

#endif

|

|

#else

|

|

#define PROT_MAX(f) 0

|

|

#endif

|

|

#ifdef __sun

|

|

extern int madvise(caddr_t, size_t, int);

|

|

#endif

|

|

#ifndef MAP_UNINITIALIZED

|

|

#define MAP_UNINITIALIZED 0

|

|

#endif

|

|

#endif

|

|

#include <errno.h>

|

|

|

|

#if ENABLE_ASSERTS

|

|

#undef NDEBUG

|

|

#if defined(_MSC_VER) && !defined(_DEBUG)

|

|

#define _DEBUG

|

|

#endif

|

|

#include <assert.h>

|

|

#define RPMALLOC_TOSTRING_M(x) #x

|

|

#define RPMALLOC_TOSTRING(x) RPMALLOC_TOSTRING_M(x)

|

|

#define rpmalloc_assert(truth, message) \

|

|

do { \

|

|

if (!(truth)) { \

|

|

if (_memory_config.error_callback) { \

|

|

_memory_config.error_callback(message " (" RPMALLOC_TOSTRING( \

|

|

truth) ") at " __FILE__ ":" RPMALLOC_TOSTRING(__LINE__)); \

|

|

} else { \

|

|

assert((truth) && message); \

|

|

} \

|

|

} \

|

|

} while (0)

|

|

#else

|

|

#define rpmalloc_assert(truth, message) \

|

|

do { \

|

|

} while (0)

|

|

#endif

|

|

#if ENABLE_STATISTICS

|

|

#include <stdio.h>

|

|

#endif

|

|

|

|

//////

|

|

///

|

|

/// Atomic access abstraction (since MSVC does not do C11 yet)

|

|

///

|

|

//////

|

|

|

|

#if defined(_MSC_VER) && !defined(__clang__)

|

|

|

|

typedef volatile long atomic32_t;

|

|

typedef volatile long long atomic64_t;

|

|

typedef volatile void *atomicptr_t;

|

|

|

|

static FORCEINLINE int32_t atomic_load32(atomic32_t *src) { return *src; }

|

|

static FORCEINLINE void atomic_store32(atomic32_t *dst, int32_t val) {

|

|

*dst = val;

|

|

}

|

|

static FORCEINLINE int32_t atomic_incr32(atomic32_t *val) {

|

|

return (int32_t)InterlockedIncrement(val);

|

|

}

|

|

static FORCEINLINE int32_t atomic_decr32(atomic32_t *val) {

|

|

return (int32_t)InterlockedDecrement(val);

|

|

}

|

|

static FORCEINLINE int32_t atomic_add32(atomic32_t *val, int32_t add) {

|

|

return (int32_t)InterlockedExchangeAdd(val, add) + add;

|

|

}

|

|

static FORCEINLINE int atomic_cas32_acquire(atomic32_t *dst, int32_t val,

|

|

int32_t ref) {

|

|

return (InterlockedCompareExchange(dst, val, ref) == ref) ? 1 : 0;

|

|

}

|

|

static FORCEINLINE void atomic_store32_release(atomic32_t *dst, int32_t val) {

|

|

*dst = val;

|

|

}

|

|

static FORCEINLINE int64_t atomic_load64(atomic64_t *src) { return *src; }

|

|

static FORCEINLINE int64_t atomic_add64(atomic64_t *val, int64_t add) {

|

|

return (int64_t)InterlockedExchangeAdd64(val, add) + add;

|

|

}

|

|

static FORCEINLINE void *atomic_load_ptr(atomicptr_t *src) {

|

|

return (void *)*src;

|

|

}

|

|

static FORCEINLINE void atomic_store_ptr(atomicptr_t *dst, void *val) {

|

|

*dst = val;

|

|

}

|

|

static FORCEINLINE void atomic_store_ptr_release(atomicptr_t *dst, void *val) {

|

|

*dst = val;

|

|

}

|

|

static FORCEINLINE void *atomic_exchange_ptr_acquire(atomicptr_t *dst,

|

|

void *val) {

|

|

return (void *)InterlockedExchangePointer((void *volatile *)dst, val);

|

|

}

|

|

static FORCEINLINE int atomic_cas_ptr(atomicptr_t *dst, void *val, void *ref) {

|

|

return (InterlockedCompareExchangePointer((void *volatile *)dst, val, ref) ==

|

|

ref)

|

|

? 1

|

|

: 0;

|

|

}

|

|

|

|

#define EXPECTED(x) (x)

|

|

#define UNEXPECTED(x) (x)

|

|

|

|

#else

|

|

|

|

#include <stdatomic.h>

|

|

|

|

typedef volatile _Atomic(int32_t) atomic32_t;

|

|

typedef volatile _Atomic(int64_t) atomic64_t;

|

|

typedef volatile _Atomic(void *) atomicptr_t;

|

|

|

|

static FORCEINLINE int32_t atomic_load32(atomic32_t *src) {

|

|

return atomic_load_explicit(src, memory_order_relaxed);

|

|

}

|

|

static FORCEINLINE void atomic_store32(atomic32_t *dst, int32_t val) {

|

|

atomic_store_explicit(dst, val, memory_order_relaxed);

|

|

}

|

|

static FORCEINLINE int32_t atomic_incr32(atomic32_t *val) {

|

|

return atomic_fetch_add_explicit(val, 1, memory_order_relaxed) + 1;

|

|

}

|

|

static FORCEINLINE int32_t atomic_decr32(atomic32_t *val) {

|

|

return atomic_fetch_add_explicit(val, -1, memory_order_relaxed) - 1;

|

|

}

|

|

static FORCEINLINE int32_t atomic_add32(atomic32_t *val, int32_t add) {

|

|

return atomic_fetch_add_explicit(val, add, memory_order_relaxed) + add;

|

|

}

|

|

static FORCEINLINE int atomic_cas32_acquire(atomic32_t *dst, int32_t val,

|

|

int32_t ref) {

|

|

return atomic_compare_exchange_weak_explicit(

|

|

dst, &ref, val, memory_order_acquire, memory_order_relaxed);

|

|

}

|

|

static FORCEINLINE void atomic_store32_release(atomic32_t *dst, int32_t val) {

|

|

atomic_store_explicit(dst, val, memory_order_release);

|

|

}

|

|

static FORCEINLINE int64_t atomic_load64(atomic64_t *val) {

|

|

return atomic_load_explicit(val, memory_order_relaxed);

|

|

}

|

|

static FORCEINLINE int64_t atomic_add64(atomic64_t *val, int64_t add) {

|

|

return atomic_fetch_add_explicit(val, add, memory_order_relaxed) + add;

|

|

}

|

|

static FORCEINLINE void *atomic_load_ptr(atomicptr_t *src) {

|

|

return atomic_load_explicit(src, memory_order_relaxed);

|

|

}

|

|

static FORCEINLINE void atomic_store_ptr(atomicptr_t *dst, void *val) {

|

|

atomic_store_explicit(dst, val, memory_order_relaxed);

|

|

}

|

|

static FORCEINLINE void atomic_store_ptr_release(atomicptr_t *dst, void *val) {

|

|

atomic_store_explicit(dst, val, memory_order_release);

|

|

}

|

|

static FORCEINLINE void *atomic_exchange_ptr_acquire(atomicptr_t *dst,

|

|

void *val) {

|

|

return atomic_exchange_explicit(dst, val, memory_order_acquire);

|

|

}

|

|

static FORCEINLINE int atomic_cas_ptr(atomicptr_t *dst, void *val, void *ref) {

|

|

return atomic_compare_exchange_weak_explicit(

|

|

dst, &ref, val, memory_order_relaxed, memory_order_relaxed);

|

|

}

|

|

|

|

#define EXPECTED(x) __builtin_expect((x), 1)

|

|

#define UNEXPECTED(x) __builtin_expect((x), 0)

|

|

|

|

#endif

|

|

|

|

////////////

|

|

///

|

|

/// Statistics related functions (evaluate to nothing when statistics not

|

|

/// enabled)

|

|

///

|

|

//////

|

|

|

|

#if ENABLE_STATISTICS

|

|

#define _rpmalloc_stat_inc(counter) atomic_incr32(counter)

|

|

#define _rpmalloc_stat_dec(counter) atomic_decr32(counter)

|

|

#define _rpmalloc_stat_add(counter, value) \

|

|

atomic_add32(counter, (int32_t)(value))

|

|

#define _rpmalloc_stat_add64(counter, value) \

|

|

atomic_add64(counter, (int64_t)(value))

|

|

#define _rpmalloc_stat_add_peak(counter, value, peak) \

|

|

do { \

|

|

int32_t _cur_count = atomic_add32(counter, (int32_t)(value)); \

|

|

if (_cur_count > (peak)) \

|

|

peak = _cur_count; \

|

|

} while (0)

|

|

#define _rpmalloc_stat_sub(counter, value) \

|

|

atomic_add32(counter, -(int32_t)(value))

|

|

#define _rpmalloc_stat_inc_alloc(heap, class_idx) \

|

|

do { \

|

|

int32_t alloc_current = \

|

|

atomic_incr32(&heap->size_class_use[class_idx].alloc_current); \

|

|

if (alloc_current > heap->size_class_use[class_idx].alloc_peak) \

|

|

heap->size_class_use[class_idx].alloc_peak = alloc_current; \

|

|

atomic_incr32(&heap->size_class_use[class_idx].alloc_total); \

|

|

} while (0)

|

|

#define _rpmalloc_stat_inc_free(heap, class_idx) \

|

|

do { \

|

|

atomic_decr32(&heap->size_class_use[class_idx].alloc_current); \

|

|

atomic_incr32(&heap->size_class_use[class_idx].free_total); \

|

|

} while (0)

|

|

#else

|

|

#define _rpmalloc_stat_inc(counter) \

|

|

do { \

|

|

} while (0)

|

|

#define _rpmalloc_stat_dec(counter) \

|

|

do { \

|

|

} while (0)

|

|

#define _rpmalloc_stat_add(counter, value) \

|

|

do { \

|

|

} while (0)

|

|

#define _rpmalloc_stat_add64(counter, value) \

|

|

do { \

|

|

} while (0)

|

|

#define _rpmalloc_stat_add_peak(counter, value, peak) \

|

|

do { \

|

|

} while (0)

|

|

#define _rpmalloc_stat_sub(counter, value) \

|

|

do { \

|

|

} while (0)

|

|

#define _rpmalloc_stat_inc_alloc(heap, class_idx) \

|

|

do { \

|

|

} while (0)

|

|

#define _rpmalloc_stat_inc_free(heap, class_idx) \

|

|

do { \

|

|

} while (0)

|

|

#endif

|

|

|

|

///

|

|

/// Preconfigured limits and sizes

|

|

///

|

|

|

|

//! Granularity of a small allocation block (must be power of two)

|

|

#define SMALL_GRANULARITY 16

|

|

//! Small granularity shift count

|

|

#define SMALL_GRANULARITY_SHIFT 4

|

|

//! Number of small block size classes

|

|

#define SMALL_CLASS_COUNT 65

|

|

//! Maximum size of a small block

|

|

#define SMALL_SIZE_LIMIT (SMALL_GRANULARITY * (SMALL_CLASS_COUNT - 1))

|

|

//! Granularity of a medium allocation block

|

|

#define MEDIUM_GRANULARITY 512

|

|

//! Medium granularity shift count

|

|

#define MEDIUM_GRANULARITY_SHIFT 9

|

|

//! Number of medium block size classes

|

|

#define MEDIUM_CLASS_COUNT 61

|

|

//! Total number of small + medium size classes

|

|

#define SIZE_CLASS_COUNT (SMALL_CLASS_COUNT + MEDIUM_CLASS_COUNT)

|

|

//! Number of large block size classes

|

|

#define LARGE_CLASS_COUNT 63

|

|

//! Maximum size of a medium block

|

|

#define MEDIUM_SIZE_LIMIT \

|

|

(SMALL_SIZE_LIMIT + (MEDIUM_GRANULARITY * MEDIUM_CLASS_COUNT))

|

|

//! Maximum size of a large block

|

|

#define LARGE_SIZE_LIMIT \

|

|

((LARGE_CLASS_COUNT * _memory_span_size) - SPAN_HEADER_SIZE)

|

|

//! Size of a span header (must be a multiple of SMALL_GRANULARITY and a power

|

|

//! of two)

|

|

#define SPAN_HEADER_SIZE 128

|

|

//! Number of spans in thread cache

|

|

#define MAX_THREAD_SPAN_CACHE 400

|

|

//! Number of spans to transfer between thread and global cache

|

|

#define THREAD_SPAN_CACHE_TRANSFER 64

|

|

//! Number of spans in thread cache for large spans (must be greater than

|

|

//! LARGE_CLASS_COUNT / 2)

|

|

#define MAX_THREAD_SPAN_LARGE_CACHE 100

|

|

//! Number of spans to transfer between thread and global cache for large spans

|

|

#define THREAD_SPAN_LARGE_CACHE_TRANSFER 6

|

|

|

|

_Static_assert((SMALL_GRANULARITY & (SMALL_GRANULARITY - 1)) == 0,

|

|

"Small granularity must be power of two");

|

|

_Static_assert((SPAN_HEADER_SIZE & (SPAN_HEADER_SIZE - 1)) == 0,

|

|

"Span header size must be power of two");

|

|

|

|

#if ENABLE_VALIDATE_ARGS

|

|

//! Maximum allocation size to avoid integer overflow

|

|

#undef MAX_ALLOC_SIZE

|

|

#define MAX_ALLOC_SIZE (((size_t) - 1) - _memory_span_size)

|

|

#endif

|

|

|

|

#define pointer_offset(ptr, ofs) (void *)((char *)(ptr) + (ptrdiff_t)(ofs))

|

|

#define pointer_diff(first, second) \

|

|

(ptrdiff_t)((const char *)(first) - (const char *)(second))

|

|

|

|

#define INVALID_POINTER ((void *)((uintptr_t) - 1))

|

|

|

|

#define SIZE_CLASS_LARGE SIZE_CLASS_COUNT

|

|

#define SIZE_CLASS_HUGE ((uint32_t) - 1)

|

|

|

|

////////////

|

|

///

|

|

/// Data types

|

|

///

|

|

//////

|

|

|

|

//! A memory heap, per thread

|

|

typedef struct heap_t heap_t;

|

|

//! Span of memory pages

|

|

typedef struct span_t span_t;

|

|

//! Span list

|

|

typedef struct span_list_t span_list_t;

|

|

//! Span active data

|

|

typedef struct span_active_t span_active_t;

|

|

//! Size class definition

|

|

typedef struct size_class_t size_class_t;

|

|

//! Global cache

|

|

typedef struct global_cache_t global_cache_t;

|

|

|

|

//! Flag indicating span is the first (master) span of a split superspan

|

|

#define SPAN_FLAG_MASTER 1U

|

|

//! Flag indicating span is a secondary (sub) span of a split superspan

|

|

#define SPAN_FLAG_SUBSPAN 2U

|

|

//! Flag indicating span has blocks with increased alignment

|

|

#define SPAN_FLAG_ALIGNED_BLOCKS 4U

|

|

//! Flag indicating an unmapped master span

|

|

#define SPAN_FLAG_UNMAPPED_MASTER 8U

|

|

|

|

#if ENABLE_ADAPTIVE_THREAD_CACHE || ENABLE_STATISTICS

|

|

struct span_use_t {

|

|

//! Current number of spans used (actually used, not in cache)

|

|

atomic32_t current;

|

|

//! High water mark of spans used

|

|

atomic32_t high;

|

|

#if ENABLE_STATISTICS

|

|

//! Number of spans in deferred list

|

|

atomic32_t spans_deferred;

|

|

//! Number of spans transitioned to global cache

|

|

atomic32_t spans_to_global;

|

|

//! Number of spans transitioned from global cache

|

|

atomic32_t spans_from_global;

|

|

//! Number of spans transitioned to thread cache

|

|

atomic32_t spans_to_cache;

|

|

//! Number of spans transitioned from thread cache

|

|

atomic32_t spans_from_cache;

|

|

//! Number of spans transitioned to reserved state

|

|

atomic32_t spans_to_reserved;

|

|

//! Number of spans transitioned from reserved state

|

|

atomic32_t spans_from_reserved;

|

|

//! Number of raw memory map calls

|

|

atomic32_t spans_map_calls;

|

|

#endif

|

|

};

|

|

typedef struct span_use_t span_use_t;

|

|

#endif

|

|

|

|

#if ENABLE_STATISTICS

|

|

struct size_class_use_t {

|

|

//! Current number of allocations

|

|

atomic32_t alloc_current;

|

|

//! Peak number of allocations

|

|

int32_t alloc_peak;

|

|

//! Total number of allocations

|

|

atomic32_t alloc_total;

|

|

//! Total number of frees

|

|

atomic32_t free_total;

|

|

//! Number of spans in use

|

|

atomic32_t spans_current;

|

|

//! Number of spans transitioned to cache

|

|

int32_t spans_peak;

|

|

//! Number of spans transitioned to cache

|

|

atomic32_t spans_to_cache;

|

|

//! Number of spans transitioned from cache

|

|

atomic32_t spans_from_cache;

|

|

//! Number of spans transitioned from reserved state

|

|

atomic32_t spans_from_reserved;

|

|

//! Number of spans mapped

|

|

atomic32_t spans_map_calls;

|

|

int32_t unused;

|

|

};

|

|

typedef struct size_class_use_t size_class_use_t;

|

|

#endif

|

|

|

|

// A span can either represent a single span of memory pages with size declared

|

|

// by span_map_count configuration variable, or a set of spans in a continuous

|

|

// region, a super span. Any reference to the term "span" usually refers to both

|

|

// a single span or a super span. A super span can further be divided into

|

|

// multiple spans (or this, super spans), where the first (super)span is the

|

|

// master and subsequent (super)spans are subspans. The master span keeps track

|

|

// of how many subspans that are still alive and mapped in virtual memory, and

|

|

// once all subspans and master have been unmapped the entire superspan region

|

|

// is released and unmapped (on Windows for example, the entire superspan range

|

|

// has to be released in the same call to release the virtual memory range, but

|

|

// individual subranges can be decommitted individually to reduce physical

|

|

// memory use).

|

|

struct span_t {

|

|

//! Free list

|

|

void *free_list;

|

|

//! Total block count of size class

|

|

uint32_t block_count;

|

|

//! Size class

|

|

uint32_t size_class;

|

|

//! Index of last block initialized in free list

|

|

uint32_t free_list_limit;

|

|

//! Number of used blocks remaining when in partial state

|

|

uint32_t used_count;

|

|

//! Deferred free list

|

|

atomicptr_t free_list_deferred;

|

|

//! Size of deferred free list, or list of spans when part of a cache list

|

|

uint32_t list_size;

|

|

//! Size of a block

|

|

uint32_t block_size;

|

|

//! Flags and counters

|

|

uint32_t flags;

|

|

//! Number of spans

|

|

uint32_t span_count;

|

|

//! Total span counter for master spans

|

|

uint32_t total_spans;

|

|

//! Offset from master span for subspans

|

|

uint32_t offset_from_master;

|

|

//! Remaining span counter, for master spans

|

|

atomic32_t remaining_spans;

|

|

//! Alignment offset

|

|

uint32_t align_offset;

|

|

//! Owning heap

|

|

heap_t *heap;

|

|

//! Next span

|

|

span_t *next;

|

|

//! Previous span

|

|

span_t *prev;

|

|

};

|

|

_Static_assert(sizeof(span_t) <= SPAN_HEADER_SIZE, "span size mismatch");

|

|

|

|

struct span_cache_t {

|

|

size_t count;

|

|

span_t *span[MAX_THREAD_SPAN_CACHE];

|

|

};

|

|

typedef struct span_cache_t span_cache_t;

|

|

|

|

struct span_large_cache_t {

|

|

size_t count;

|

|

span_t *span[MAX_THREAD_SPAN_LARGE_CACHE];

|

|

};

|

|

typedef struct span_large_cache_t span_large_cache_t;

|

|

|

|

struct heap_size_class_t {

|

|

//! Free list of active span

|

|

void *free_list;

|

|

//! Double linked list of partially used spans with free blocks.

|

|

// Previous span pointer in head points to tail span of list.

|

|

span_t *partial_span;

|

|

//! Early level cache of fully free spans

|

|

span_t *cache;

|

|

};

|

|

typedef struct heap_size_class_t heap_size_class_t;

|

|

|

|

// Control structure for a heap, either a thread heap or a first class heap if

|

|

// enabled

|

|

struct heap_t {

|

|

//! Owning thread ID

|

|

uintptr_t owner_thread;

|

|

//! Free lists for each size class

|

|

heap_size_class_t size_class[SIZE_CLASS_COUNT];

|

|

#if ENABLE_THREAD_CACHE

|

|

//! Arrays of fully freed spans, single span

|

|

span_cache_t span_cache;

|

|

#endif

|

|

//! List of deferred free spans (single linked list)

|

|

atomicptr_t span_free_deferred;

|

|

//! Number of full spans

|

|

size_t full_span_count;

|

|

//! Mapped but unused spans

|

|

span_t *span_reserve;

|

|

//! Master span for mapped but unused spans

|

|

span_t *span_reserve_master;

|

|

//! Number of mapped but unused spans

|

|

uint32_t spans_reserved;

|

|

//! Child count

|

|

atomic32_t child_count;

|

|

//! Next heap in id list

|

|

heap_t *next_heap;

|

|

//! Next heap in orphan list

|

|

heap_t *next_orphan;

|

|

//! Heap ID

|

|

int32_t id;

|

|

//! Finalization state flag

|

|

int finalize;

|

|

//! Master heap owning the memory pages

|

|

heap_t *master_heap;

|

|

#if ENABLE_THREAD_CACHE

|

|

//! Arrays of fully freed spans, large spans with > 1 span count

|

|

span_large_cache_t span_large_cache[LARGE_CLASS_COUNT - 1];

|

|

#endif

|

|

#if RPMALLOC_FIRST_CLASS_HEAPS

|

|

//! Double linked list of fully utilized spans with free blocks for each size

|

|

//! class.

|

|

// Previous span pointer in head points to tail span of list.

|

|

span_t *full_span[SIZE_CLASS_COUNT];

|

|

//! Double linked list of large and huge spans allocated by this heap

|

|

span_t *large_huge_span;

|

|

#endif

|

|

#if ENABLE_ADAPTIVE_THREAD_CACHE || ENABLE_STATISTICS

|

|

//! Current and high water mark of spans used per span count

|

|

span_use_t span_use[LARGE_CLASS_COUNT];

|

|

#endif

|

|

#if ENABLE_STATISTICS

|

|

//! Allocation stats per size class

|

|

size_class_use_t size_class_use[SIZE_CLASS_COUNT + 1];

|

|

//! Number of bytes transitioned thread -> global

|

|

atomic64_t thread_to_global;

|

|

//! Number of bytes transitioned global -> thread

|

|

atomic64_t global_to_thread;

|

|

#endif

|

|

};

|

|

|

|

// Size class for defining a block size bucket

|

|

struct size_class_t {

|

|

//! Size of blocks in this class

|

|

uint32_t block_size;

|

|

//! Number of blocks in each chunk

|

|

uint16_t block_count;

|

|

//! Class index this class is merged with

|

|

uint16_t class_idx;

|

|

};

|

|

_Static_assert(sizeof(size_class_t) == 8, "Size class size mismatch");

|

|

|

|

struct global_cache_t {

|

|

//! Cache lock

|

|

atomic32_t lock;

|

|

//! Cache count

|

|

uint32_t count;

|

|

#if ENABLE_STATISTICS

|

|

//! Insert count

|

|

size_t insert_count;

|

|

//! Extract count

|

|

size_t extract_count;

|

|

#endif

|

|

//! Cached spans

|

|

span_t *span[GLOBAL_CACHE_MULTIPLIER * MAX_THREAD_SPAN_CACHE];

|

|

//! Unlimited cache overflow

|

|

span_t *overflow;

|

|

};

|

|

|

|

////////////

|

|

///

|

|

/// Global data

|

|

///

|

|

//////

|

|

|

|

//! Default span size (64KiB)

|

|

#define _memory_default_span_size (64 * 1024)

|

|

#define _memory_default_span_size_shift 16

|

|

#define _memory_default_span_mask (~((uintptr_t)(_memory_span_size - 1)))

|

|

|

|

//! Initialized flag

|

|

static int _rpmalloc_initialized;

|

|

//! Main thread ID

|

|

static uintptr_t _rpmalloc_main_thread_id;

|

|

//! Configuration

|

|

static rpmalloc_config_t _memory_config;

|

|

//! Memory page size

|

|

static size_t _memory_page_size;

|

|

//! Shift to divide by page size

|

|

static size_t _memory_page_size_shift;

|

|

//! Granularity at which memory pages are mapped by OS

|

|

static size_t _memory_map_granularity;

|

|

#if RPMALLOC_CONFIGURABLE

|

|

//! Size of a span of memory pages

|

|

static size_t _memory_span_size;

|

|

//! Shift to divide by span size

|

|

static size_t _memory_span_size_shift;

|

|

//! Mask to get to start of a memory span

|

|

static uintptr_t _memory_span_mask;

|

|

#else

|

|

//! Hardwired span size

|

|

#define _memory_span_size _memory_default_span_size

|

|

#define _memory_span_size_shift _memory_default_span_size_shift

|

|

#define _memory_span_mask _memory_default_span_mask

|

|

#endif

|

|

//! Number of spans to map in each map call

|

|

static size_t _memory_span_map_count;

|

|

//! Number of spans to keep reserved in each heap

|

|

static size_t _memory_heap_reserve_count;

|

|

//! Global size classes

|

|

static size_class_t _memory_size_class[SIZE_CLASS_COUNT];

|

|

//! Run-time size limit of medium blocks

|

|

static size_t _memory_medium_size_limit;

|

|

//! Heap ID counter

|

|

static atomic32_t _memory_heap_id;

|

|

//! Huge page support

|

|

static int _memory_huge_pages;

|

|

#if ENABLE_GLOBAL_CACHE

|

|

//! Global span cache

|

|

static global_cache_t _memory_span_cache[LARGE_CLASS_COUNT];

|

|

#endif

|

|

//! Global reserved spans

|

|

static span_t *_memory_global_reserve;

|

|

//! Global reserved count

|

|

static size_t _memory_global_reserve_count;

|

|

//! Global reserved master

|

|

static span_t *_memory_global_reserve_master;

|

|

//! All heaps

|

|

static heap_t *_memory_heaps[HEAP_ARRAY_SIZE];

|

|

//! Used to restrict access to mapping memory for huge pages

|

|

static atomic32_t _memory_global_lock;

|

|

//! Orphaned heaps

|

|

static heap_t *_memory_orphan_heaps;

|

|

#if RPMALLOC_FIRST_CLASS_HEAPS

|

|

//! Orphaned heaps (first class heaps)

|

|

static heap_t *_memory_first_class_orphan_heaps;

|

|

#endif

|

|

#if ENABLE_STATISTICS

|

|

//! Allocations counter

|

|

static atomic64_t _allocation_counter;

|

|

//! Deallocations counter

|

|

static atomic64_t _deallocation_counter;

|

|

//! Active heap count

|

|

static atomic32_t _memory_active_heaps;

|

|

//! Number of currently mapped memory pages

|

|

static atomic32_t _mapped_pages;

|

|

//! Peak number of concurrently mapped memory pages

|

|

static int32_t _mapped_pages_peak;

|

|

//! Number of mapped master spans

|

|

static atomic32_t _master_spans;

|

|

//! Number of unmapped dangling master spans

|

|

static atomic32_t _unmapped_master_spans;

|

|

//! Running counter of total number of mapped memory pages since start

|

|

static atomic32_t _mapped_total;

|

|

//! Running counter of total number of unmapped memory pages since start

|

|

static atomic32_t _unmapped_total;

|

|

//! Number of currently mapped memory pages in OS calls

|

|

static atomic32_t _mapped_pages_os;

|

|

//! Number of currently allocated pages in huge allocations

|

|

static atomic32_t _huge_pages_current;

|

|

//! Peak number of currently allocated pages in huge allocations

|

|

static int32_t _huge_pages_peak;

|

|

#endif

|

|

|

|

////////////

|

|

///

|

|

/// Thread local heap and ID

|

|

///

|

|

//////

|

|

|

|

//! Current thread heap

|

|

#if ((defined(__APPLE__) || defined(__HAIKU__)) && ENABLE_PRELOAD) || \

|

|

defined(__TINYC__)

|

|

static pthread_key_t _memory_thread_heap;

|

|

#else

|

|

#ifdef _MSC_VER

|

|

#define _Thread_local __declspec(thread)

|

|

#define TLS_MODEL

|

|

#else

|

|

#ifndef __HAIKU__

|

|

#define TLS_MODEL __attribute__((tls_model("initial-exec")))

|

|

#else

|

|

#define TLS_MODEL

|

|

#endif

|

|

#if !defined(__clang__) && defined(__GNUC__)

|

|

#define _Thread_local __thread

|

|

#endif

|

|

#endif

|

|

static _Thread_local heap_t *_memory_thread_heap TLS_MODEL;

|

|

#endif

|

|

|

|

static inline heap_t *get_thread_heap_raw(void) {

|

|

#if (defined(__APPLE__) || defined(__HAIKU__)) && ENABLE_PRELOAD

|

|

return pthread_getspecific(_memory_thread_heap);

|

|

#else

|

|

return _memory_thread_heap;

|

|

#endif

|

|

}

|

|

|

|

//! Get the current thread heap

|

|

static inline heap_t *get_thread_heap(void) {

|

|

heap_t *heap = get_thread_heap_raw();

|

|

#if ENABLE_PRELOAD

|

|

if (EXPECTED(heap != 0))

|

|

return heap;

|

|

rpmalloc_initialize();

|

|

return get_thread_heap_raw();

|

|

#else

|

|

return heap;

|

|

#endif

|

|

}

|

|

|

|

//! Fast thread ID

|

|

static inline uintptr_t get_thread_id(void) {

|

|

#if defined(_WIN32)

|

|

return (uintptr_t)((void *)NtCurrentTeb());

|

|

#elif (defined(__GNUC__) || defined(__clang__)) && !defined(__CYGWIN__)

|

|

uintptr_t tid;

|

|

#if defined(__i386__)

|

|

__asm__("movl %%gs:0, %0" : "=r"(tid) : :);

|

|

#elif defined(__x86_64__)

|

|

#if defined(__MACH__)

|

|

__asm__("movq %%gs:0, %0" : "=r"(tid) : :);

|

|

#else

|

|

__asm__("movq %%fs:0, %0" : "=r"(tid) : :);

|

|

#endif

|

|

#elif defined(__arm__)

|

|

__asm__ volatile("mrc p15, 0, %0, c13, c0, 3" : "=r"(tid));

|

|

#elif defined(__aarch64__)

|

|

#if defined(__MACH__)

|

|

// tpidr_el0 likely unused, always return 0 on iOS

|

|

__asm__ volatile("mrs %0, tpidrro_el0" : "=r"(tid));

|

|

#else

|

|

__asm__ volatile("mrs %0, tpidr_el0" : "=r"(tid));

|

|

#endif

|

|

#else

|

|

#error This platform needs implementation of get_thread_id()

|

|

#endif

|

|

return tid;

|

|

#else

|

|

#error This platform needs implementation of get_thread_id()

|

|

#endif

|

|

}

|

|

|

|

//! Set the current thread heap

|

|

static void set_thread_heap(heap_t *heap) {

|

|

#if ((defined(__APPLE__) || defined(__HAIKU__)) && ENABLE_PRELOAD) || \

|

|

defined(__TINYC__)

|

|

pthread_setspecific(_memory_thread_heap, heap);

|

|

#else

|

|

_memory_thread_heap = heap;

|

|

#endif

|

|

if (heap)

|

|

heap->owner_thread = get_thread_id();

|

|

}

|

|

|

|

//! Set main thread ID

|

|

extern void rpmalloc_set_main_thread(void);

|

|

|

|

void rpmalloc_set_main_thread(void) {

|

|

_rpmalloc_main_thread_id = get_thread_id();

|

|

}

|

|

|

|

static void _rpmalloc_spin(void) {

|

|

#if defined(_MSC_VER)

|

|

#if defined(_M_ARM64)

|

|

__yield();

|

|

#else

|

|

_mm_pause();

|

|

#endif

|

|

#elif defined(__x86_64__) || defined(__i386__)

|

|

__asm__ volatile("pause" ::: "memory");

|

|

#elif defined(__aarch64__) || (defined(__arm__) && __ARM_ARCH >= 7)

|

|

__asm__ volatile("yield" ::: "memory");

|

|

#elif defined(__powerpc__) || defined(__powerpc64__)

|

|

// No idea if ever been compiled in such archs but ... as precaution

|

|

__asm__ volatile("or 27,27,27");

|

|

#elif defined(__sparc__)

|

|

__asm__ volatile("rd %ccr, %g0 \n\trd %ccr, %g0 \n\trd %ccr, %g0");

|

|

#else

|

|

struct timespec ts = {0};

|

|

nanosleep(&ts, 0);

|

|

#endif

|

|

}

|

|

|

|

#if defined(_WIN32) && (!defined(BUILD_DYNAMIC_LINK) || !BUILD_DYNAMIC_LINK)

|

|

static void NTAPI _rpmalloc_thread_destructor(void *value) {

|

|

#if ENABLE_OVERRIDE

|

|

// If this is called on main thread it means rpmalloc_finalize

|

|

// has not been called and shutdown is forced (through _exit) or unclean

|

|

if (get_thread_id() == _rpmalloc_main_thread_id)

|

|

return;

|

|

#endif

|

|

if (value)

|

|

rpmalloc_thread_finalize(1);

|

|

}

|

|

#endif

|

|

|

|

////////////

|

|

///

|

|

/// Low level memory map/unmap

|

|

///

|

|

//////

|

|

|

|

static void _rpmalloc_set_name(void *address, size_t size) {

|

|

#if defined(__linux__) || defined(__ANDROID__)

|

|

const char *name = _memory_huge_pages ? _memory_config.huge_page_name

|

|

: _memory_config.page_name;

|

|

if (address == MAP_FAILED || !name)

|

|

return;

|

|

// If the kernel does not support CONFIG_ANON_VMA_NAME or if the call fails

|

|

// (e.g. invalid name) it is a no-op basically.

|

|

(void)prctl(PR_SET_VMA, PR_SET_VMA_ANON_NAME, (uintptr_t)address, size,

|

|

(uintptr_t)name);

|

|

#else

|

|

(void)sizeof(size);

|

|

(void)sizeof(address);

|

|

#endif

|

|

}

|

|

|

|

//! Map more virtual memory

|

|

// size is number of bytes to map

|

|

// offset receives the offset in bytes from start of mapped region

|

|

// returns address to start of mapped region to use

|

|

static void *_rpmalloc_mmap(size_t size, size_t *offset) {

|

|

rpmalloc_assert(!(size % _memory_page_size), "Invalid mmap size");

|

|

rpmalloc_assert(size >= _memory_page_size, "Invalid mmap size");

|

|

void *address = _memory_config.memory_map(size, offset);

|

|

if (EXPECTED(address != 0)) {

|

|

_rpmalloc_stat_add_peak(&_mapped_pages, (size >> _memory_page_size_shift),

|

|

_mapped_pages_peak);

|

|

_rpmalloc_stat_add(&_mapped_total, (size >> _memory_page_size_shift));

|

|

}

|

|

return address;

|

|

}

|

|

|

|

//! Unmap virtual memory

|

|

// address is the memory address to unmap, as returned from _memory_map

|

|

// size is the number of bytes to unmap, which might be less than full region

|

|

// for a partial unmap offset is the offset in bytes to the actual mapped

|

|

// region, as set by _memory_map release is set to 0 for partial unmap, or size

|

|

// of entire range for a full unmap

|

|

static void _rpmalloc_unmap(void *address, size_t size, size_t offset,

|

|

size_t release) {

|

|

rpmalloc_assert(!release || (release >= size), "Invalid unmap size");

|

|

rpmalloc_assert(!release || (release >= _memory_page_size),

|

|

"Invalid unmap size");

|

|

if (release) {

|

|

rpmalloc_assert(!(release % _memory_page_size), "Invalid unmap size");

|

|

_rpmalloc_stat_sub(&_mapped_pages, (release >> _memory_page_size_shift));

|

|

_rpmalloc_stat_add(&_unmapped_total, (release >> _memory_page_size_shift));

|

|

}

|

|

_memory_config.memory_unmap(address, size, offset, release);

|

|

}

|

|

|

|

//! Default implementation to map new pages to virtual memory

|

|

static void *_rpmalloc_mmap_os(size_t size, size_t *offset) {

|

|

// Either size is a heap (a single page) or a (multiple) span - we only need

|

|

// to align spans, and only if larger than map granularity

|

|

size_t padding = ((size >= _memory_span_size) &&

|

|

(_memory_span_size > _memory_map_granularity))

|

|

? _memory_span_size

|

|

: 0;

|

|

rpmalloc_assert(size >= _memory_page_size, "Invalid mmap size");

|

|

#if PLATFORM_WINDOWS

|

|

// Ok to MEM_COMMIT - according to MSDN, "actual physical pages are not

|

|

// allocated unless/until the virtual addresses are actually accessed"

|

|

void *ptr = VirtualAlloc(0, size + padding,

|

|

(_memory_huge_pages ? MEM_LARGE_PAGES : 0) |

|

|

MEM_RESERVE | MEM_COMMIT,

|

|

PAGE_READWRITE);

|

|

if (!ptr) {

|

|

if (_memory_config.map_fail_callback) {

|

|

if (_memory_config.map_fail_callback(size + padding))

|

|

return _rpmalloc_mmap_os(size, offset);

|

|

} else {

|

|

rpmalloc_assert(ptr, "Failed to map virtual memory block");

|

|

}

|

|

return 0;

|

|

}

|

|

#else

|

|

int flags = MAP_PRIVATE | MAP_ANONYMOUS | MAP_UNINITIALIZED;

|

|

#if defined(__APPLE__) && !TARGET_OS_IPHONE && !TARGET_OS_SIMULATOR

|

|

int fd = (int)VM_MAKE_TAG(240U);

|

|

if (_memory_huge_pages)

|

|

fd |= VM_FLAGS_SUPERPAGE_SIZE_2MB;

|

|

void *ptr = mmap(0, size + padding, PROT_READ | PROT_WRITE, flags, fd, 0);

|

|

#elif defined(MAP_HUGETLB)

|

|

void *ptr = mmap(0, size + padding,

|

|

PROT_READ | PROT_WRITE | PROT_MAX(PROT_READ | PROT_WRITE),

|

|

(_memory_huge_pages ? MAP_HUGETLB : 0) | flags, -1, 0);

|

|

#if defined(MADV_HUGEPAGE)

|

|

// In some configurations, huge pages allocations might fail thus

|

|

// we fallback to normal allocations and promote the region as transparent

|

|

// huge page

|

|

if ((ptr == MAP_FAILED || !ptr) && _memory_huge_pages) {

|

|

ptr = mmap(0, size + padding, PROT_READ | PROT_WRITE, flags, -1, 0);

|

|

if (ptr && ptr != MAP_FAILED) {

|

|

int prm = madvise(ptr, size + padding, MADV_HUGEPAGE);

|

|

(void)prm;

|

|

rpmalloc_assert((prm == 0), "Failed to promote the page to THP");

|

|

}

|

|

}

|

|

#endif

|

|

_rpmalloc_set_name(ptr, size + padding);

|

|

#elif defined(MAP_ALIGNED)

|

|

const size_t align =

|

|

(sizeof(size_t) * 8) - (size_t)(__builtin_clzl(size - 1));

|

|

void *ptr =

|

|

mmap(0, size + padding, PROT_READ | PROT_WRITE,

|

|

(_memory_huge_pages ? MAP_ALIGNED(align) : 0) | flags, -1, 0);

|

|

#elif defined(MAP_ALIGN)

|

|

caddr_t base = (_memory_huge_pages ? (caddr_t)(4 << 20) : 0);

|

|

void *ptr = mmap(base, size + padding, PROT_READ | PROT_WRITE,

|

|

(_memory_huge_pages ? MAP_ALIGN : 0) | flags, -1, 0);

|

|

#else

|

|

void *ptr = mmap(0, size + padding, PROT_READ | PROT_WRITE, flags, -1, 0);

|

|

#endif

|

|

if ((ptr == MAP_FAILED) || !ptr) {

|

|

if (_memory_config.map_fail_callback) {

|

|

if (_memory_config.map_fail_callback(size + padding))

|

|

return _rpmalloc_mmap_os(size, offset);

|

|

} else if (errno != ENOMEM) {

|

|

rpmalloc_assert((ptr != MAP_FAILED) && ptr,

|

|

"Failed to map virtual memory block");

|

|

}

|

|

return 0;

|

|

}

|

|

#endif

|

|

_rpmalloc_stat_add(&_mapped_pages_os,

|

|

(int32_t)((size + padding) >> _memory_page_size_shift));

|

|

if (padding) {

|

|

size_t final_padding = padding - ((uintptr_t)ptr & ~_memory_span_mask);

|

|

rpmalloc_assert(final_padding <= _memory_span_size,

|

|

"Internal failure in padding");

|

|

rpmalloc_assert(final_padding <= padding, "Internal failure in padding");

|

|

rpmalloc_assert(!(final_padding % 8), "Internal failure in padding");

|

|

ptr = pointer_offset(ptr, final_padding);

|

|

*offset = final_padding >> 3;

|

|

}

|

|

rpmalloc_assert((size < _memory_span_size) ||

|

|

!((uintptr_t)ptr & ~_memory_span_mask),

|

|

"Internal failure in padding");

|

|

return ptr;

|

|

}

|

|

|

|

//! Default implementation to unmap pages from virtual memory

|

|

static void _rpmalloc_unmap_os(void *address, size_t size, size_t offset,

|

|

size_t release) {

|

|

rpmalloc_assert(release || (offset == 0), "Invalid unmap size");

|

|

rpmalloc_assert(!release || (release >= _memory_page_size),

|

|

"Invalid unmap size");

|

|

rpmalloc_assert(size >= _memory_page_size, "Invalid unmap size");

|

|

if (release && offset) {

|

|

offset <<= 3;

|

|

address = pointer_offset(address, -(int32_t)offset);

|

|

if ((release >= _memory_span_size) &&

|

|

(_memory_span_size > _memory_map_granularity)) {

|

|

// Padding is always one span size

|

|

release += _memory_span_size;

|

|

}

|

|

}

|

|

#if !DISABLE_UNMAP

|

|

#if PLATFORM_WINDOWS

|

|

if (!VirtualFree(address, release ? 0 : size,

|

|

release ? MEM_RELEASE : MEM_DECOMMIT)) {

|

|

rpmalloc_assert(0, "Failed to unmap virtual memory block");

|

|

}

|

|

#else

|

|

if (release) {

|

|

if (munmap(address, release)) {

|

|

rpmalloc_assert(0, "Failed to unmap virtual memory block");

|

|

}

|

|

} else {

|

|

#if defined(MADV_FREE_REUSABLE)

|

|

int ret;

|

|

while ((ret = madvise(address, size, MADV_FREE_REUSABLE)) == -1 &&

|

|

(errno == EAGAIN))

|

|

errno = 0;

|

|

if ((ret == -1) && (errno != 0)) {

|

|

#elif defined(MADV_DONTNEED)

|

|

if (madvise(address, size, MADV_DONTNEED)) {

|

|

#elif defined(MADV_PAGEOUT)

|

|

if (madvise(address, size, MADV_PAGEOUT)) {

|

|

#elif defined(MADV_FREE)

|

|

if (madvise(address, size, MADV_FREE)) {

|

|

#else

|

|

if (posix_madvise(address, size, POSIX_MADV_DONTNEED)) {

|

|

#endif

|

|

rpmalloc_assert(0, "Failed to madvise virtual memory block as free");

|

|

}

|

|

}

|

|

#endif

|

|

#endif

|

|

if (release)

|

|

_rpmalloc_stat_sub(&_mapped_pages_os, release >> _memory_page_size_shift);

|

|

}

|

|

|

|

static void _rpmalloc_span_mark_as_subspan_unless_master(span_t *master,

|

|

span_t *subspan,

|

|

size_t span_count);

|

|

|

|

//! Use global reserved spans to fulfill a memory map request (reserve size must

|

|

//! be checked by caller)

|

|

static span_t *_rpmalloc_global_get_reserved_spans(size_t span_count) {

|

|

span_t *span = _memory_global_reserve;

|

|

_rpmalloc_span_mark_as_subspan_unless_master(_memory_global_reserve_master,

|

|

span, span_count);

|

|

_memory_global_reserve_count -= span_count;

|

|

if (_memory_global_reserve_count)

|

|

_memory_global_reserve =

|

|

(span_t *)pointer_offset(span, span_count << _memory_span_size_shift);

|

|

else

|

|

_memory_global_reserve = 0;

|

|

return span;

|

|

}

|

|

|

|

//! Store the given spans as global reserve (must only be called from within new

|

|

//! heap allocation, not thread safe)

|

|

static void _rpmalloc_global_set_reserved_spans(span_t *master, span_t *reserve,

|

|

size_t reserve_span_count) {

|

|

_memory_global_reserve_master = master;

|

|

_memory_global_reserve_count = reserve_span_count;

|

|

_memory_global_reserve = reserve;

|

|

}

|

|

|

|

////////////

|

|

///

|

|

/// Span linked list management

|

|

///

|

|

//////

|

|

|

|

//! Add a span to double linked list at the head

|

|

static void _rpmalloc_span_double_link_list_add(span_t **head, span_t *span) {

|

|

if (*head)

|

|

(*head)->prev = span;

|

|

span->next = *head;

|

|

*head = span;

|

|

}

|

|

|

|

//! Pop head span from double linked list

|

|

static void _rpmalloc_span_double_link_list_pop_head(span_t **head,

|

|

span_t *span) {

|

|

rpmalloc_assert(*head == span, "Linked list corrupted");

|

|

span = *head;

|

|

*head = span->next;

|

|

}

|

|

|

|

//! Remove a span from double linked list

|

|

static void _rpmalloc_span_double_link_list_remove(span_t **head,

|

|

span_t *span) {

|

|

rpmalloc_assert(*head, "Linked list corrupted");

|

|

if (*head == span) {

|

|

*head = span->next;

|

|

} else {

|

|

span_t *next_span = span->next;

|

|

span_t *prev_span = span->prev;

|

|

prev_span->next = next_span;

|

|

if (EXPECTED(next_span != 0))

|

|

next_span->prev = prev_span;

|

|

}

|

|

}

|

|

|

|

////////////

|

|

///

|

|

/// Span control

|

|

///

|

|

//////

|

|

|

|

static void _rpmalloc_heap_cache_insert(heap_t *heap, span_t *span);

|

|

|

|

static void _rpmalloc_heap_finalize(heap_t *heap);

|

|

|

|

static void _rpmalloc_heap_set_reserved_spans(heap_t *heap, span_t *master,

|

|

span_t *reserve,

|

|

size_t reserve_span_count);

|

|

|

|

//! Declare the span to be a subspan and store distance from master span and

|

|

//! span count

|

|

static void _rpmalloc_span_mark_as_subspan_unless_master(span_t *master,

|

|

span_t *subspan,

|

|

size_t span_count) {

|

|

rpmalloc_assert((subspan != master) || (subspan->flags & SPAN_FLAG_MASTER),

|

|

"Span master pointer and/or flag mismatch");

|

|

if (subspan != master) {

|

|

subspan->flags = SPAN_FLAG_SUBSPAN;

|

|

subspan->offset_from_master =

|

|

(uint32_t)((uintptr_t)pointer_diff(subspan, master) >>

|

|

_memory_span_size_shift);

|

|

subspan->align_offset = 0;

|

|

}

|

|

subspan->span_count = (uint32_t)span_count;

|

|

}

|

|

|

|

//! Use reserved spans to fulfill a memory map request (reserve size must be

|

|

//! checked by caller)

|

|

static span_t *_rpmalloc_span_map_from_reserve(heap_t *heap,

|

|

size_t span_count) {

|

|

// Update the heap span reserve

|

|

span_t *span = heap->span_reserve;

|

|

heap->span_reserve =

|

|

(span_t *)pointer_offset(span, span_count * _memory_span_size);

|

|

heap->spans_reserved -= (uint32_t)span_count;

|

|

|

|

_rpmalloc_span_mark_as_subspan_unless_master(heap->span_reserve_master, span,

|

|

span_count);

|

|

if (span_count <= LARGE_CLASS_COUNT)

|

|

_rpmalloc_stat_inc(&heap->span_use[span_count - 1].spans_from_reserved);

|

|

|

|

return span;

|

|

}

|

|

|

|

//! Get the aligned number of spans to map in based on wanted count, configured

|

|

//! mapping granularity and the page size

|

|

static size_t _rpmalloc_span_align_count(size_t span_count) {

|

|

size_t request_count = (span_count > _memory_span_map_count)

|

|

? span_count

|

|

: _memory_span_map_count;

|

|

if ((_memory_page_size > _memory_span_size) &&

|

|

((request_count * _memory_span_size) % _memory_page_size))

|

|

request_count +=

|

|

_memory_span_map_count - (request_count % _memory_span_map_count);

|

|

return request_count;

|

|

}

|

|

|

|

//! Setup a newly mapped span

|

|

static void _rpmalloc_span_initialize(span_t *span, size_t total_span_count,

|

|

size_t span_count, size_t align_offset) {

|

|

span->total_spans = (uint32_t)total_span_count;

|

|

span->span_count = (uint32_t)span_count;

|

|

span->align_offset = (uint32_t)align_offset;

|

|

span->flags = SPAN_FLAG_MASTER;

|

|

atomic_store32(&span->remaining_spans, (int32_t)total_span_count);

|

|

}

|

|

|

|

static void _rpmalloc_span_unmap(span_t *span);

|

|

|

|

//! Map an aligned set of spans, taking configured mapping granularity and the

|

|

//! page size into account

|

|

static span_t *_rpmalloc_span_map_aligned_count(heap_t *heap,

|

|

size_t span_count) {

|

|

// If we already have some, but not enough, reserved spans, release those to

|

|

// heap cache and map a new full set of spans. Otherwise we would waste memory

|

|

// if page size > span size (huge pages)

|

|

size_t aligned_span_count = _rpmalloc_span_align_count(span_count);

|

|

size_t align_offset = 0;

|

|

span_t *span = (span_t *)_rpmalloc_mmap(

|

|

aligned_span_count * _memory_span_size, &align_offset);

|

|

if (!span)

|

|

return 0;

|

|

_rpmalloc_span_initialize(span, aligned_span_count, span_count, align_offset);

|

|

_rpmalloc_stat_inc(&_master_spans);

|

|

if (span_count <= LARGE_CLASS_COUNT)

|

|

_rpmalloc_stat_inc(&heap->span_use[span_count - 1].spans_map_calls);

|

|

if (aligned_span_count > span_count) {

|

|

span_t *reserved_spans =

|

|

(span_t *)pointer_offset(span, span_count * _memory_span_size);

|

|

size_t reserved_count = aligned_span_count - span_count;

|

|

if (heap->spans_reserved) {

|

|

_rpmalloc_span_mark_as_subspan_unless_master(

|

|

heap->span_reserve_master, heap->span_reserve, heap->spans_reserved);

|

|

_rpmalloc_heap_cache_insert(heap, heap->span_reserve);

|

|

}

|

|

if (reserved_count > _memory_heap_reserve_count) {

|

|

// If huge pages or eager spam map count, the global reserve spin lock is

|

|

// held by caller, _rpmalloc_span_map

|

|

rpmalloc_assert(atomic_load32(&_memory_global_lock) == 1,

|

|

"Global spin lock not held as expected");

|

|

size_t remain_count = reserved_count - _memory_heap_reserve_count;

|

|

reserved_count = _memory_heap_reserve_count;

|

|

span_t *remain_span = (span_t *)pointer_offset(

|

|

reserved_spans, reserved_count * _memory_span_size);

|

|

if (_memory_global_reserve) {

|

|

_rpmalloc_span_mark_as_subspan_unless_master(

|

|

_memory_global_reserve_master, _memory_global_reserve,

|